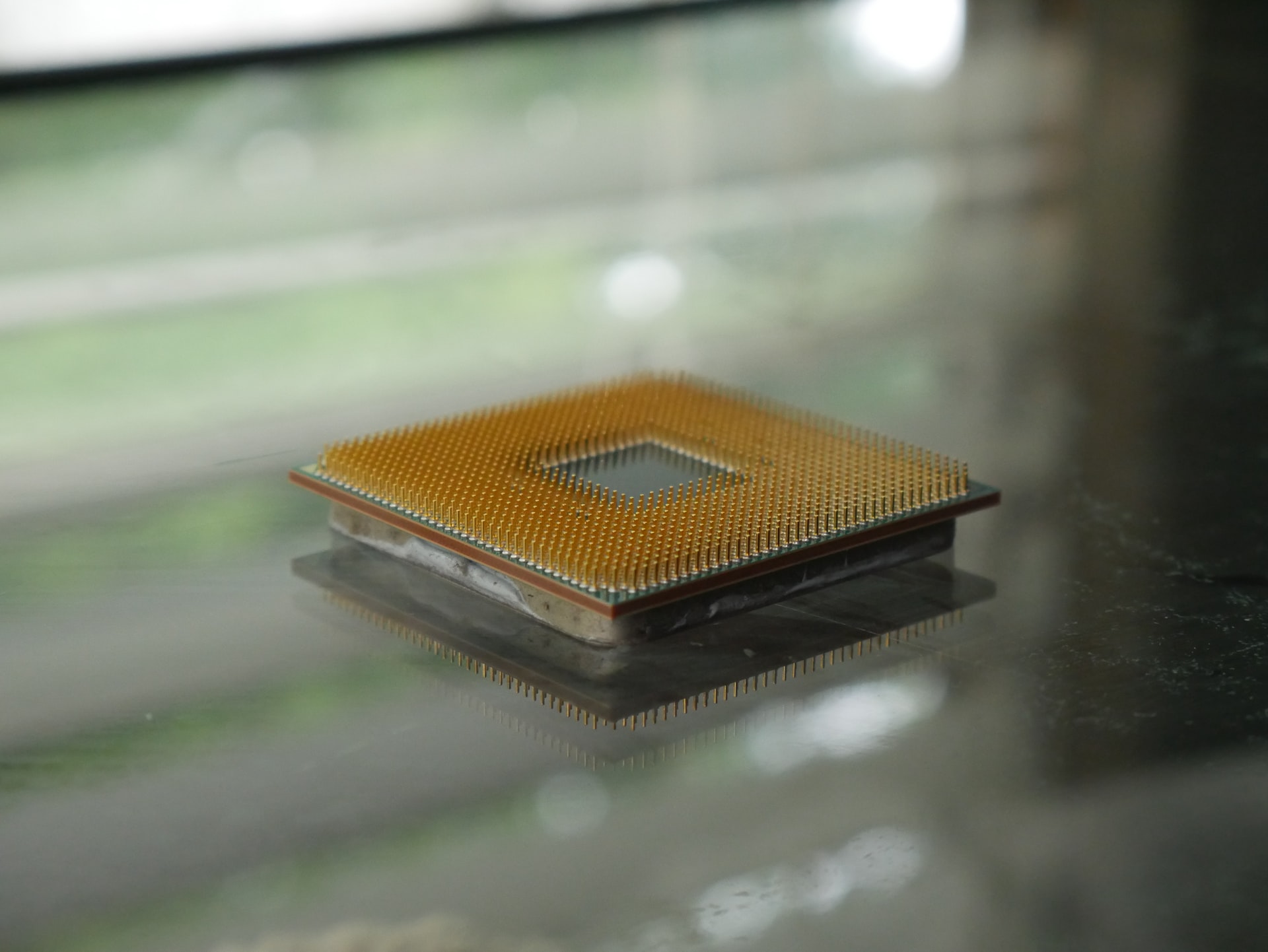

Photo by Devin Spell on Unsplash

THE IMPORTANCE OF OUTSOURCED SEMICONDUCTOR ASSEMBLY AND TEST

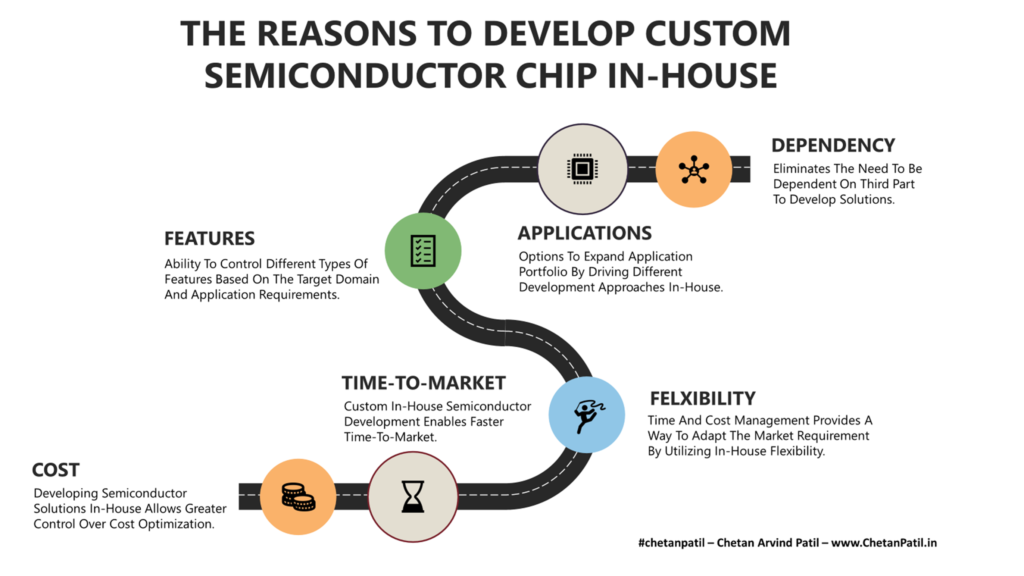

Outsourcing is one of several ways to optimize in-house business activities, which is why most industries rely heavily on it. In the long run, hiring an external vendor to handle part of the product development process not only brings operational efficiency but also frees up internal resources.

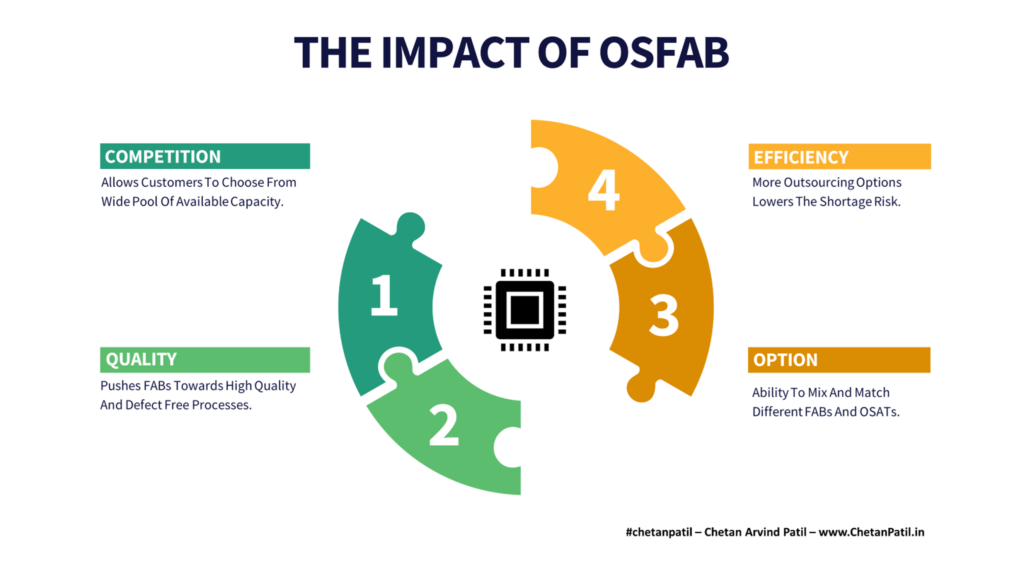

The semiconductor industry is also heavily driven by outsourcing. Even more than design, the manufacturing side of semiconductor product development relies on services provided by external vendors. The two major examples of semiconductor outsourcing are FABs (Pure-Play Foundries) and OSATs.

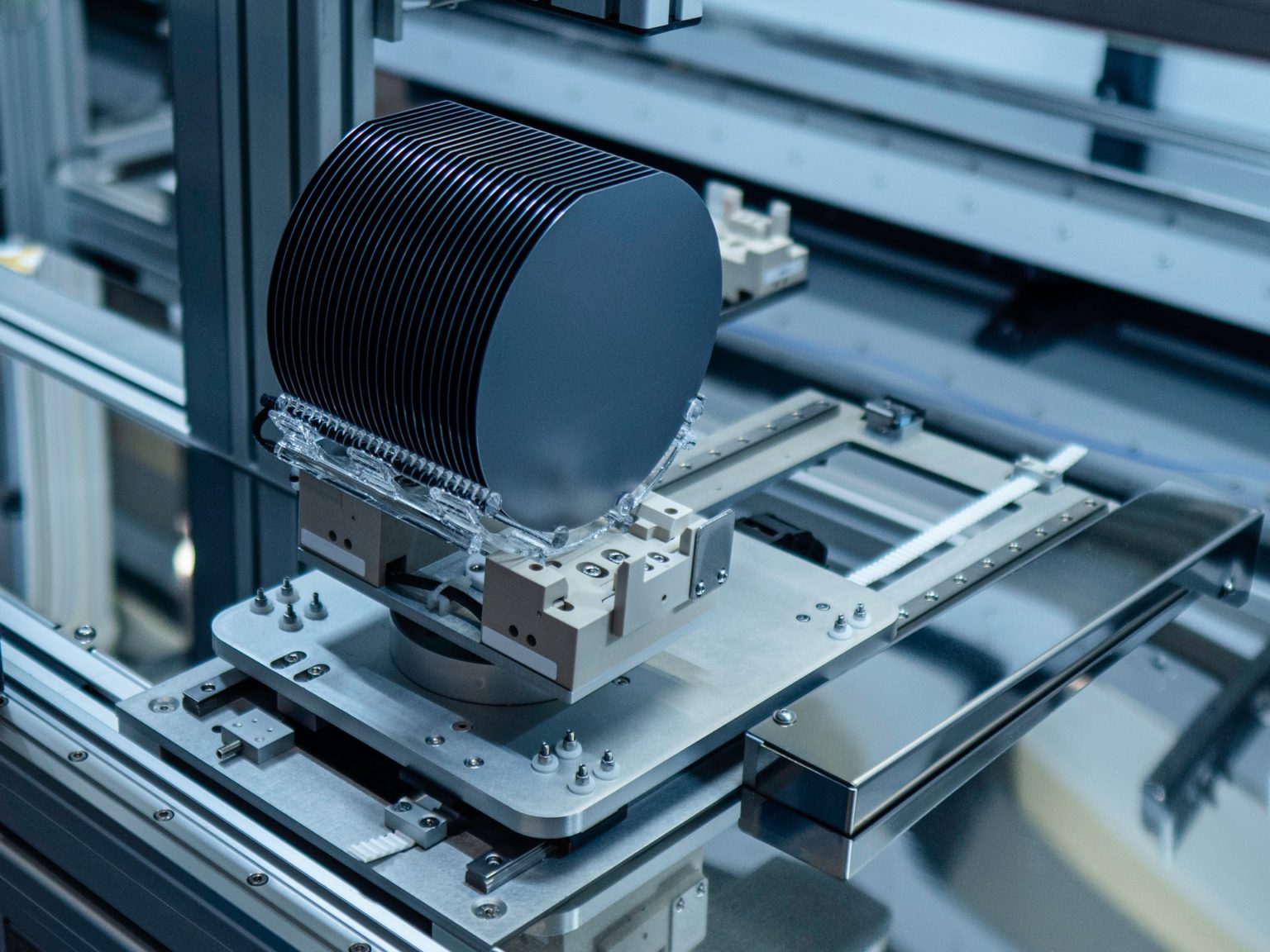

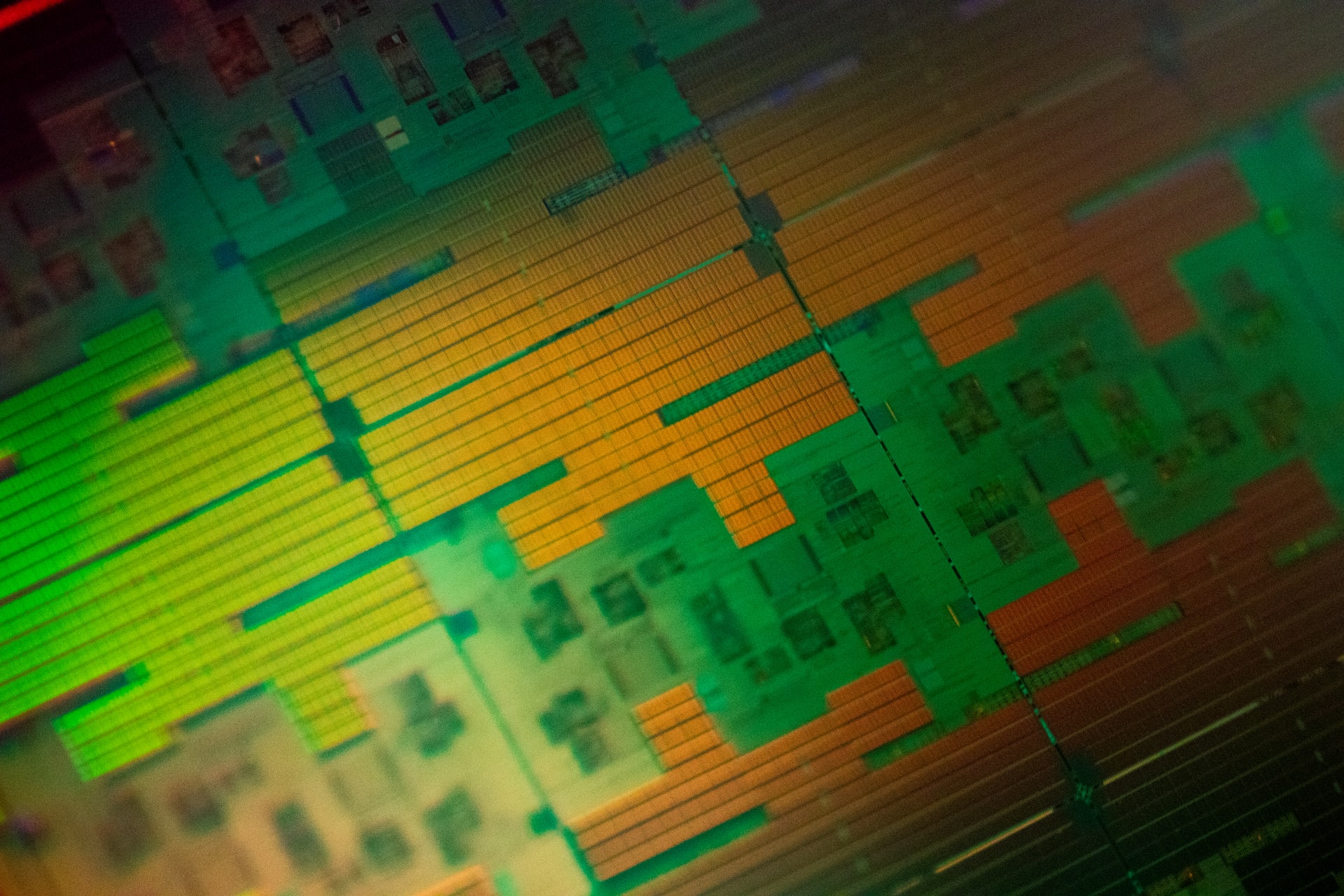

Pure-Play Foundries: Provide services that transform design files into real silicon wafers.

OSATs: Take the fabricated silicon wafers and put them through testing and assembly.

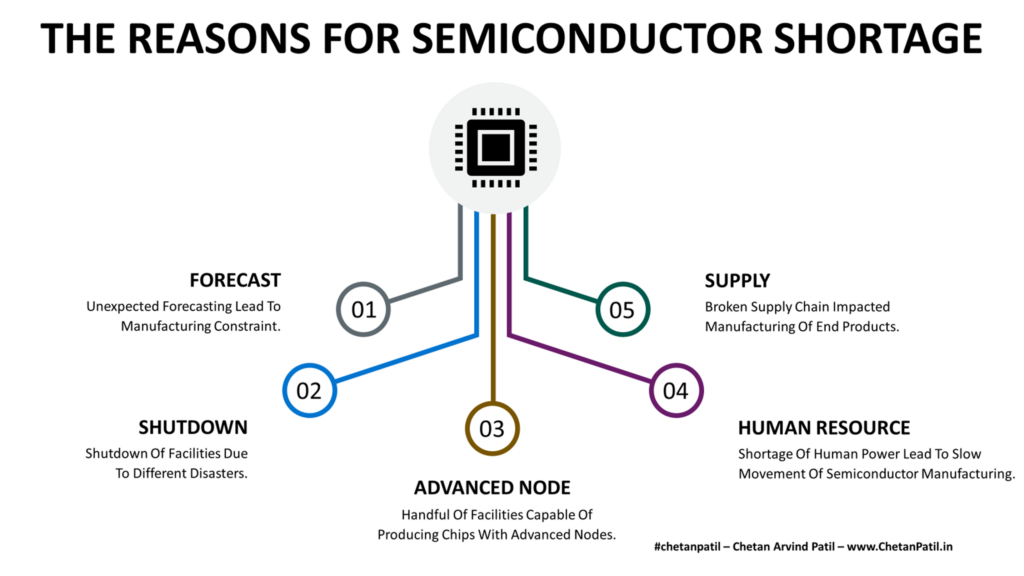

The semiconductor shortage has shown the world the importance of semiconductor FABs. This is the primary reason why countries all over the world are offering attractive incentives to invite Pure-Play Foundries to set up new FABs. However, from a manufacturing point of view, semiconductor FABs provide only 50% of the services necessary to turn a product design into reality. The other 50% depends on OSATs (Outsourced Semiconductor Assembly and Test).

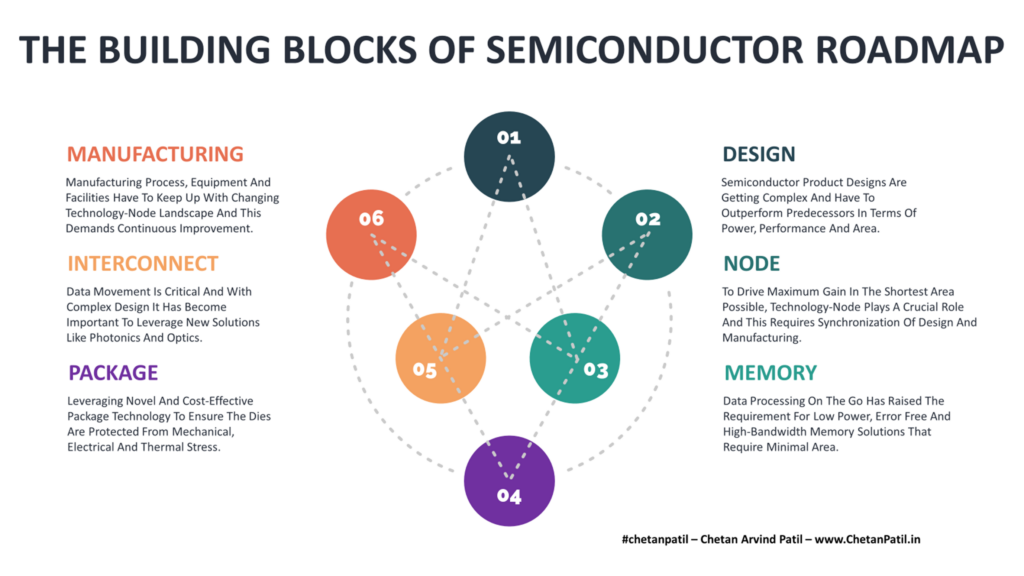

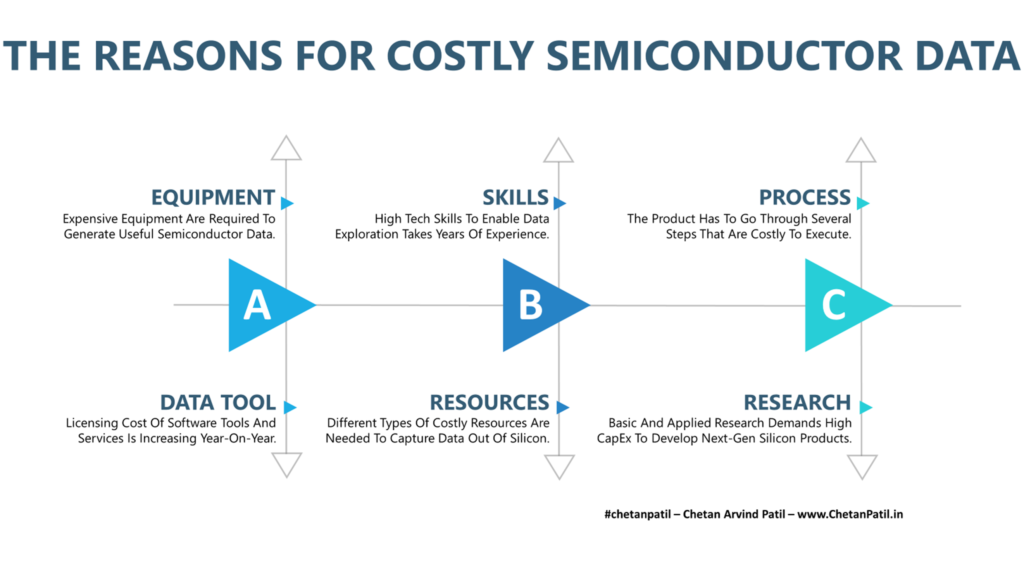

Test and packaging (also referred to as assembly) are major parts of semiconductor manufacturing, and these are the two services that OSATs provide. Apart from providing high-tech, research-driven packaging solutions, OSATs invest heavily in equipment and processes that enable testing of different types of wafers and parts. The cost of running the testing and packaging process is the main reason why most semiconductor companies rely on outsourcing.

As a result, several OSATs have emerged around the world over the last four decades. Their growing importance is also why the semiconductor industry should focus on OSATs when talking about building new manufacturing capacity, rather than focusing only on semiconductor FABs, which cover just half of the semiconductor product development process.

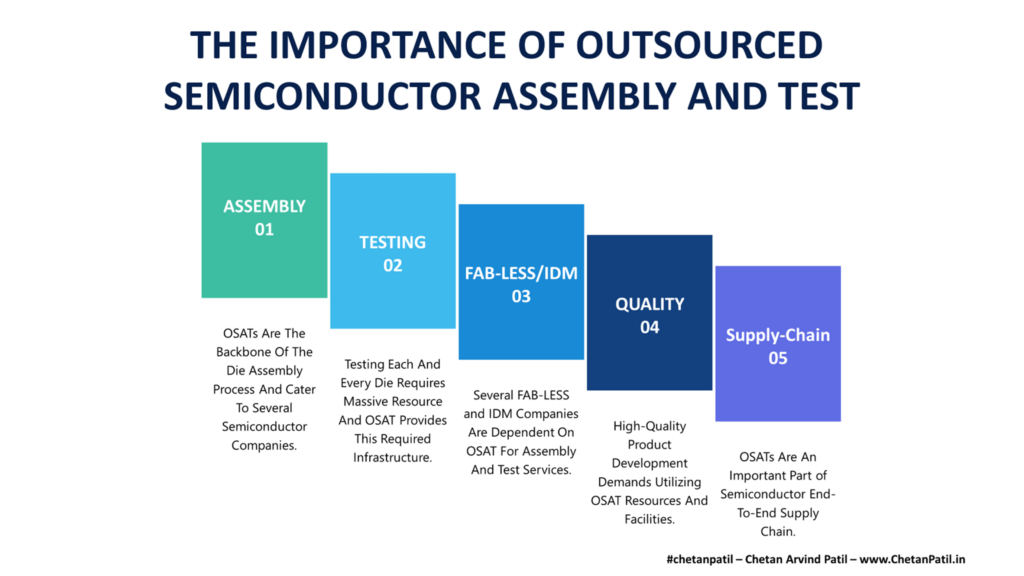

Several factors have made OSATs the backbone of the semiconductor industry. Without OSAT houses, the semiconductor supply chain would be inefficient, for the following reasons:

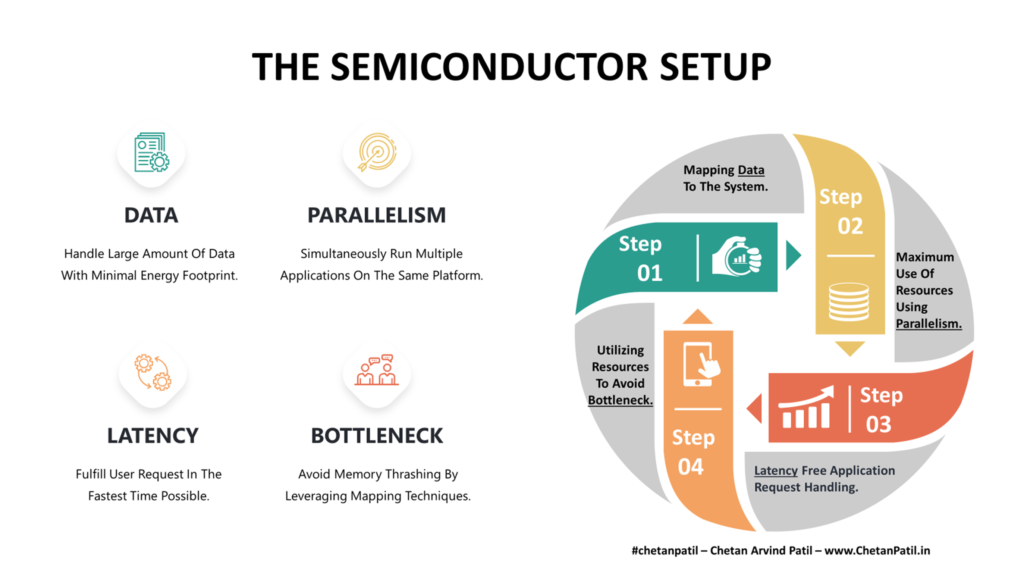

Assembly: Every semiconductor die has to be packaged into an assembled product that can be soldered onto the target application platform. This is where OSATs come into play, as their primary focus is assembly services (offering different package technologies). OSATs invest heavily in research and development to provide different types of assembly options, and over the last few years, semiconductor design houses have increasingly relied on OSATs for their assembly requirements.

Testing: Testing and assembly go hand in hand, which is why OSATs provide testing services by default. These services require high-end equipment so that any type of wafer can be tested with minimal human intervention. In many cases, testing is also carried out on packaged parts and is the de facto way to screen bad parts out of the assembly line.

FAB-LESS/IDM: Not all semiconductor companies have in-house manufacturing facilities. This applies to FAB-LESS companies and to some IDMs. These companies therefore leverage external FABs and OSATs to meet their fabrication, testing, and assembly needs. This is another reason why OSATs have grown in importance: many FAB-LESS companies and IDMs depend on them.

Quality: Years of industrial experience have enabled OSATs to provide high-quality services that drive defect-free testing and assembly. In the long run, OSATs ensure that the product being tested and assembled follows a robust recipe that removes low-quality parts from the production line, thus improving the quality of their customers' products.

Supply-Chain: The end-to-end semiconductor flow requires several stakeholders to come together, which is where companies providing different services come into the picture. Design and fabrication houses are a major part of the supply chain, but without OSATs to meet the testing and assembly requirements, the supply chain is incomplete. The outsourced services OSATs provide make them the last critical step in the semiconductor supply chain.

Even though there are several OSAT vendors in the market, only a few are well known and have expanded their business and reach over the last few decades. While this is certainly good news for semiconductor growth, it is slowly presenting a challenge similar to the one seen with semiconductor FABs, where a handful of players drive the business. The same scenario now applies to the back-end OSAT arena, where a few companies keep increasing their market share and making the semiconductor supply chain dependent on them.

In the long run, this could prove costly, which is why the semiconductor industry needs diverse players providing FAB and OSAT services. Now is the right time to act, as countries are looking to attract new manufacturing houses to set up shop, and new players can leverage these incentives to create a niche market for themselves.

THE OPPORTUNITIES IN THE OUTSOURCED SEMICONDUCTOR ASSEMBLY AND TEST ARENA

The OSAT market share tells a story similar to that of semiconductor FABs. Three to four players have dominated the OSAT arena for several years, and the gap between them and the smaller OSAT players is widening year on year.

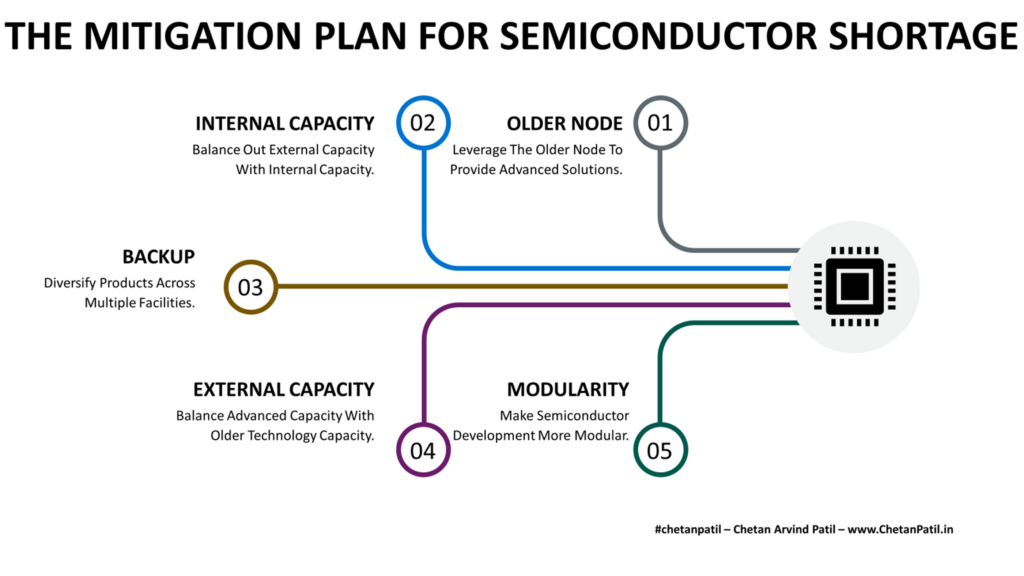

There is nothing inherently wrong with the big OSAT players getting bigger. The problem arises when demand spikes and the top players cannot accommodate all requests, which eventually leads to longer processing (test and assembly) times. In such situations, the need for larger, more diversified OSAT capacity becomes apparent.

The sudden rise in semiconductor demand has affected not only semiconductor FABs but also OSATs. In some sense, this presents an opportunity for emerging OSATs, semiconductor investors, and countries/governments to focus on the OSAT business if the cost of developing new FABs is too high or too risky.

OSATs can be an excellent vehicle for emerging semiconductor manufacturing regions, as they require less investment than semiconductor FABs while still offering attractive revenue. Focusing on OSAT capacity can also drive growth in semiconductor manufacturing for countries that have not yet had the fortune of hosting semiconductor FABs.

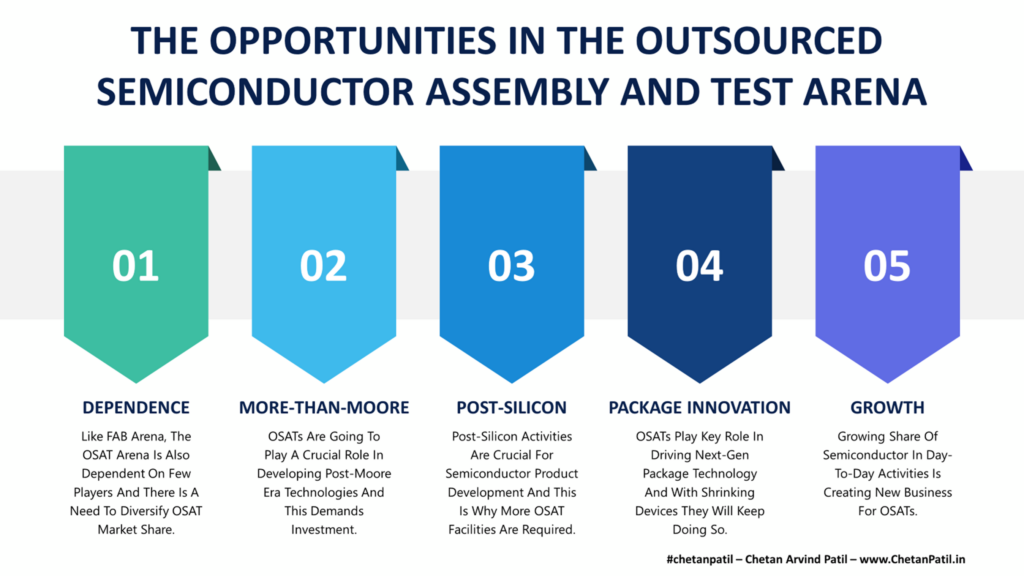

The opportunities in the OSAT business arena are many and span a good mix of business and technical factors:

Dependence: When it comes to optimizing semiconductor operational activities, hiring OSATs to perform testing and packaging is one of the most important decisions. The growing dependence on OSATs has led to the expansion of some of the top players, making FAB-LESS companies and IDMs alike dependent on a few top OSAT houses. To balance this out (similar to what the semiconductor FAB market also needs), there is an opportunity for new, emerging OSATs to provide more capacity to the semiconductor industry, which could reduce dependence on a few select players.

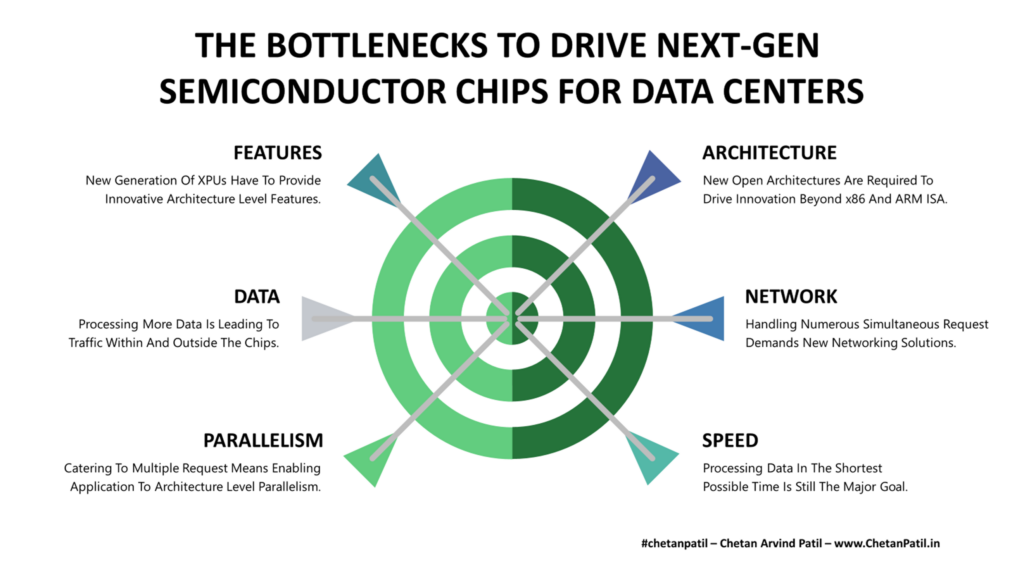

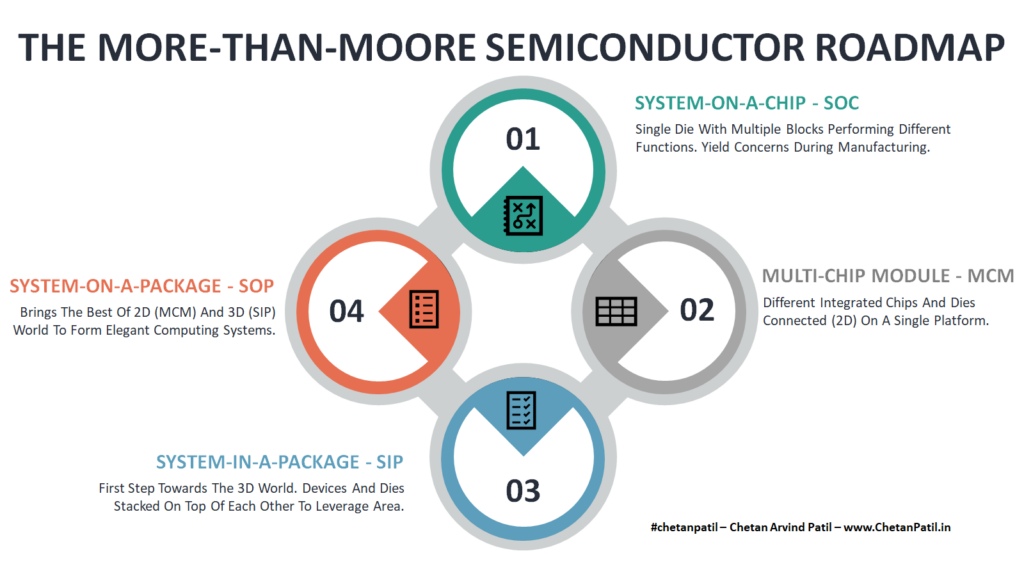

More-Than-Moore: As process nodes approach and move beyond 1nm, research into technologies that can drive solutions beyond Moore's law is also critical. OSATs have an important part to play, mainly because of the different package technologies that can help drive next-gen semiconductor solutions such as chiplets and heterogeneous integration.

Post-Silicon: More than 50% of semiconductor product development activities occur during the post-silicon stage. From FABs to OSATs to ATMPs (Assembly, Test, Marking, and Packaging facilities) to distributors, all play a critical role in bringing the design to life. As part of the post-silicon process, OSATs have grown in importance over the last decade. The complexity of new chip designs is also pushing OSATs to upgrade their facilities to handle the probing of new types of chips. This presents an opportunity not only for the OSAT market but also for equipment and tool manufacturers.

Package Innovation: Package innovation will be a continuous process. FAB-LESS companies and other semiconductor design houses can come up with new packaging solutions, but they will always need an OSAT vendor to execute them and bring the new package technology to reality. The main reason is the lack of in-house assembly and testing facilities (which often cost millions of dollars), so relying on OSATs is the best way to optimize cost while driving new package innovations.

Growth: The increasing share of semiconductors in everyday solutions is putting a lot of pressure on semiconductor manufacturing, and this is the major reason why the semiconductor market will keep growing for the next few years or even decades. The heavy dependence on OSAT services makes them an attractive venture and a great candidate for countries looking to ignite semiconductor manufacturing clusters within their borders.

The importance of OSATs is well known in the semiconductor industry. By building large facilities, they provide critical services for the last important piece of semiconductor manufacturing. This is why countries looking to attract semiconductor manufacturing should focus on OSATs first and then build their manufacturing infrastructure up to the FABs.

Ultimately, as the manufacturing side of semiconductor product development grows in importance, so will both FABs and OSATs.