Image Generated Using Nano Banana

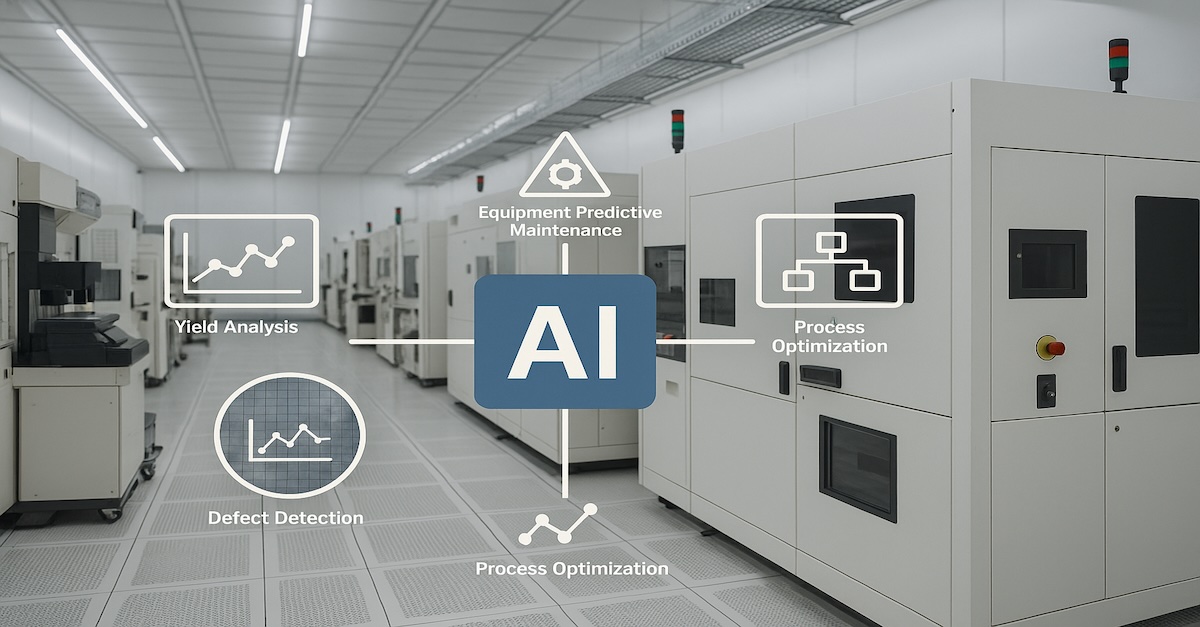

What Are Semiconductor Agents

Semiconductor Agents are AI model-driven assistants built to support the digital stages of chip development across design, verification, optimization, and analysis. Unlike traditional automation scripts or rule-based flows, these agents use large models trained on RTL, constraints, waveforms, logs, and tool interactions.

This gives them the ability to interpret engineering intent, reason about complex design states, and take autonomous actions across EDA workflows. In practical terms, they act as specialized digital coworkers that help engineers manage work that is too large, too repetitive, or too interconnected for manual execution.

In design, these agents can generate RTL scaffolds, build verification environments, explore architectural tradeoffs, analyze regression failures, and recommend PPA improvements. In verification, they generate tests, identify coverage gaps, diagnose failure signatures, and run multi-step debug sequences. In physical design, they assist with constraint tuning, congestion analysis, timing closure, and design space exploration by using model-driven reasoning to evaluate large option spaces much faster than human iteration.

Put simply, model-driven semiconductor agents are intelligent systems that speed up chip development, improve its accuracy, and help it scale. They convert slow, script-heavy engineering loops into guided, automated workflows, representing a significant shift in how modern silicon will be created.

Are These Agents Real Or Hype?

Model-driven semiconductor agents are no longer a future idea. They are already used in modern EDA platforms, where they automate tasks such as RTL generation, testbench creation, debug assistance, and design optimization.

These agents rely on large models trained on engineering data, tool interactions, and prior design patterns, which allows them to operate with a level of reasoning that simple scripts cannot match.

Academic research supports this progress. For example, one paper (“Proof2Silicon: Prompt Repair for Verified Code and Hardware Generation via Reinforcement Learning”) reports that a reinforcement-learning-guided prompt system improved formal verification success rates by up to 21% and achieved an end-to-end hardware synthesis success rate of 72%.

In another study (“ASIC‑Agent: An Autonomous Multi‑Agent System for ASIC Design with Benchmark Evaluation”), the authors introduce a sandboxed agent architecture that spans RTL generation, verification, and chip integration, demonstrating meaningful workflow acceleration.

These research-driven examples show that model-driven and agent-based methods are moving beyond concept toward applied results in chip design.

It is still early, and no single agent can design a full chip. Human engineers guide decisions, verify results, and manage architectural intent. But the momentum is real. Model-driven semiconductor agents are practical, maturing quickly, and steadily becoming an essential part of how the industry will design and verify chips at scale.

How Semiconductor Agents Integrate Into the Silicon Lifecycle

In early design exploration, a semiconductor agent could take a natural-language module description and generate an initial RTL draft along with interface definitions and basic assertions. Engineers would then refine the output instead of starting from a blank file. This reduces time spent on boilerplate RTL and allows teams to explore architectural directions more quickly and with less friction.

During verification, an agent could analyze regression results, classify failures based on patterns in signals and logs, and propose a minimal reproduction test. This turns hours of manual waveform inspection into a short, actionable summary. Engineers receive clear guidance on where a failure originated and why it may be happening, which shortens debug cycles and helps verification progress more consistently.
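The triage step described above can be illustrated with a minimal sketch. It assumes failures arrive as `(test_name, error_message)` pairs and groups them by a normalized signature. The log format, the test names, and the `normalize` and `triage` helpers are all hypothetical, and a real agent would draw on far richer signals (waveforms, coverage, design hierarchy) than plain string patterns.

```python
import re
from collections import defaultdict


def normalize(message: str) -> str:
    """Collapse run-specific details so similar failures share one signature."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)  # addresses, data values
    message = re.sub(r"\d+", "<N>", message)               # timestamps, counters
    return message.strip()


def triage(failures):
    """Group (test_name, error_message) pairs by normalized signature.

    Returns a list of (signature, [test names]) sorted with the most
    frequent signature first.
    """
    buckets = defaultdict(list)
    for test_name, message in failures:
        buckets[normalize(message)].append(test_name)
    return sorted(buckets.items(), key=lambda kv: len(kv[1]), reverse=True)


# Hypothetical regression output, invented for illustration only.
failures = [
    ("test_axi_burst_12", "ERROR @ 1520ns: AXI RRESP mismatch at addr 0x4000"),
    ("test_axi_burst_37", "ERROR @ 980ns: AXI RRESP mismatch at addr 0x8010"),
    ("test_fifo_full", "ERROR @ 300ns: FIFO overflow, depth 16"),
]

for signature, tests in triage(failures):
    print(f"{len(tests)} failure(s): {signature} -> {tests}")
```

Here the two AXI failures collapse into one bucket, which is the kind of grouping that lets an engineer look at one representative failure instead of every failing test.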

| Stage of Lifecycle | Possible Agent Use Case | What The Agent Can Do | Value to Engineering Teams |
|---|---|---|---|
| Design | RTL Draft Generation | Converts written specifications into initial RTL scaffolds and interface definitions | Faster architecture exploration and reduced boilerplate coding |
| Design | Constraint & Architecture Suggestions | Analyzes goals and proposes timing, power, or area tradeoff options | Helps evaluate design alternatives quickly |
| Verification | Automated Testbench Generation | Builds UVM components, assertions, and directed tests from module descriptions | Reduces manual setup time and accelerates early verification |
| Verification | Regression Triage & Pattern Detection | Classifies failures, identifies recurring issues, and recommends likely root causes | Compresses debug cycles and improves coverage closure |
| Physical Design | PPA Exploration | Evaluates multiple constraint and floorplan options using model reasoning | Narrows the search space and speeds up timing closure |
| Physical Design | Congestion & Timing Analysis | Predicts hotspots or slack bottlenecks and suggests candidate fixes | Reduces the number of full P&R iterations |
| Signoff | Intelligent Rule Checking | Identifies high-risk areas in timing, IR drop, or design-for-test based on learned patterns | Helps engineers prioritize review efforts |
| Product Engineering | Anomaly Detection in Pre-Silicon Data | Analyzes logs, waveform summaries, or DFT patterns to detect inconsistencies | Improves first-silicon success probability |
| System Bring-Up | Issue Localization | Interprets bring-up logs and suggests potential firmware or hardware mismatches | Shortens early debug during lab validation |

In physical design, an agent could evaluate many constraint and floorplan variations using model-driven reasoning. By analyzing congestion signatures, timing slack, and area tradeoffs, it could narrow the design space to a few strong candidates. Engineers would then focus on validating these options rather than manually exploring hundreds of combinations, improving both the speed and the quality of timing closure.
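One simple way to picture "narrowing the design space" is a Pareto filter: keep only the candidates that no other candidate beats on every metric at once. The sketch below is an illustration under stated assumptions, not how any particular EDA tool or agent works. The `Candidate` fields, the metric values, and the floorplan names are all hypothetical, and in practice the metrics would come from model predictions or fast trial runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    worst_slack_ns: float  # higher is better
    congestion: float      # lower is better (e.g. peak routing utilization)
    area_mm2: float        # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on every metric and strictly better on one."""
    no_worse = (
        a.worst_slack_ns >= b.worst_slack_ns
        and a.congestion <= b.congestion
        and a.area_mm2 <= b.area_mm2
    )
    strictly_better = (
        a.worst_slack_ns > b.worst_slack_ns
        or a.congestion < b.congestion
        or a.area_mm2 < b.area_mm2
    )
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep only the candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


# Hypothetical floorplan options with invented metric values.
candidates = [
    Candidate("fp_a", worst_slack_ns=0.05, congestion=0.70, area_mm2=1.20),
    Candidate("fp_b", worst_slack_ns=-0.10, congestion=0.80, area_mm2=1.25),
    Candidate("fp_c", worst_slack_ns=0.12, congestion=0.85, area_mm2=1.30),
    Candidate("fp_d", worst_slack_ns=0.02, congestion=0.60, area_mm2=1.10),
]

shortlist = pareto_front(candidates)
print([c.name for c in shortlist])
```

In this example `fp_b` drops out because `fp_a` is better on all three metrics, while the remaining options represent genuine tradeoffs that still need an engineer's judgment.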

Who Is Building Semiconductor Agents And What It Takes

Semiconductor agents are being developed primarily by EDA vendors and a new generation of AI-EDA startups. Established tool providers are adding large models into their design and verification platforms, while startups are building agent-first workflows for RTL, verification, and debug. These systems sit on top of existing EDA engines and aim to reduce repetitive engineering work.

Building these agents requires deep domain data and strong tool integration. Helpful agents depend on RTL datasets, constraints, logs, waveforms, and optimization traces. They also need alignment layers that help the model understand engineering intent and connect reliably to commercial EDA tools, enabling execution of multi-step flows.

| Category | Who Is Building Them | What They Contribute | What It Takes to Build Agents |
|---|---|---|---|
| EDA Vendors | Established design-tool providers | Agent-assisted RTL, verification, debug | Large datasets, tight EDA integration, safety guardrails |
| AI-EDA Startups | Model-focused EDA companies | Multi-agent workflows and rapid innovation | Proprietary models and close customer iteration |
| Semiconductor Companies | Internal CAD and design teams | Real data and domain expertise | Access to RTL, ECO histories, regressions, waveforms |
| Academic Labs | Universities and research centers | New multi-agent methods and algorithms | Research datasets and algorithm development |

Trust and correctness are central to building these agents. Because chip design errors are costly, teams need guardrails, human oversight, and verifiable outputs. Agents must behave predictably and avoid changes that violate timing, physical, or functional rules.
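A guardrail of this kind can be as simple as refusing to pass along any agent-proposed change that fails a verifiable check. The following is a minimal sketch, assuming each proposed change comes with post-change metrics in a dictionary. The metric names, thresholds, and the `gate_change` helper are hypothetical, and real checks would be driven by signoff tools rather than hand-written lambdas.

```python
def gate_change(metrics, checks):
    """Run every check against the post-change metrics.

    Returns ("rejected", [failed check names]) if any check fails,
    otherwise ("ready_for_human_review", []). Passing the gate does not
    replace engineer sign-off; it only filters out clear violations.
    """
    failed = [name for name, check in checks.items() if not check(metrics)]
    if failed:
        return ("rejected", failed)
    return ("ready_for_human_review", [])


# Hypothetical checks covering timing, lint, and functional rules.
checks = {
    "timing": lambda m: m["worst_slack_ns"] >= 0.0,
    "lint": lambda m: m["lint_errors"] == 0,
    "functional": lambda m: m["tests_failed"] == 0,
}

# Invented metrics for an agent-proposed change that breaks timing.
proposed = {"worst_slack_ns": -0.03, "lint_errors": 0, "tests_failed": 0}

status, failed_checks = gate_change(proposed, checks)
print(status, failed_checks)
```

The design choice worth noting is that the gate only ever rejects or escalates; it never approves a change on its own, which keeps the human oversight described above in the loop.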

In summary, semiconductor agents are being built by organizations with the right data, EDA expertise, and safety practices. Creating them requires large models, strong domain alignment, and deep integration with existing tools, and these foundations are now driving their rapid adoption.