
Understanding LLM And AI Agents

Large language models (LLMs) such as GPT have been developed to comprehend, generate, and interact with human language on a large scale. Trained on extensive text data, these models can produce coherent and contextually relevant text, making them valuable across various applications.

LLMs reach their full potential when integrated into AI Agents. Acting as independent entities, these agents perceive their environment, make decisions, and take action. In practice, they work like intelligent assistants that interpret complex inputs and carry out tasks with minimal human intervention.

In semiconductor production, LLM-based AI Agents offer an opportunity to simplify traditionally intricate and highly specialized processes. Their language understanding and decision-making capabilities can be harnessed to optimize operations, reduce errors, and foster innovation in ways that were previously impractical.

So, how exactly can LLM AI Agents revolutionize semiconductor production? Let us explore.

How LLM AI Agents Can Enhance Semiconductor Production

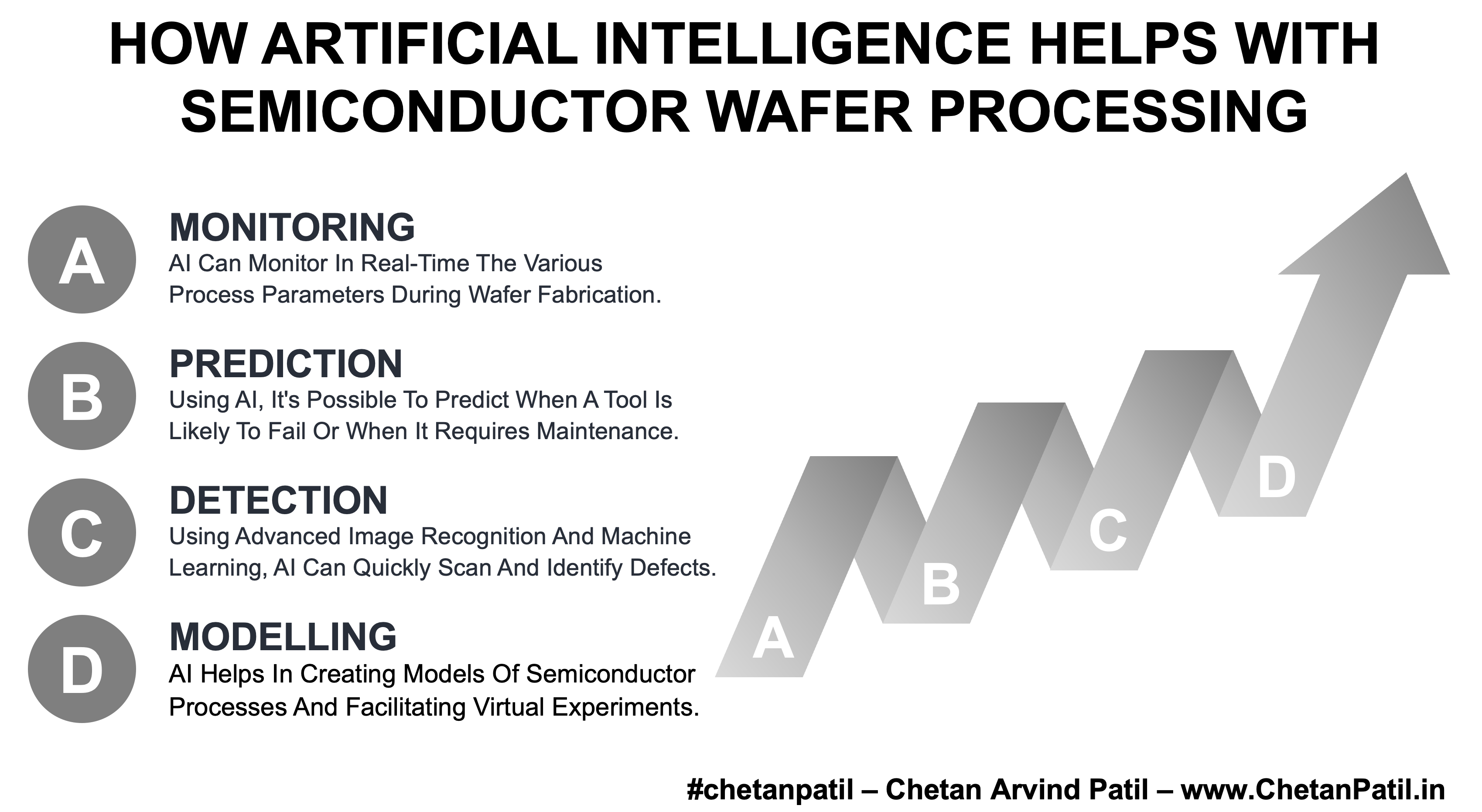

In the high-stakes world of semiconductor manufacturing, where precision is paramount and costs are high, LLM AI Agents stand out as a promising solution. Capabilities such as real-time monitoring, advanced data analytics, and predictive maintenance could enhance efficiency, improve quality, and streamline production, making these agents a valuable addition to a semiconductor production line.

LLM AI Agents can potentially revolutionize semiconductor production through proactive process optimization. While these agents are already showing promise in various industries, their full impact on semiconductor manufacturing is still emerging.

The vision is clear: LLM AI Agents could go beyond merely monitoring manufacturing equipment in real-time – they could predict when maintenance might be needed long before any visible issues arise. By leveraging advanced data analytics to detect even the slightest deviations from optimal performance, these agents could help ensure that production lines run smoothly and efficiently, minimizing unplanned downtime and preventing costly disruptions.
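To make "detecting deviations from optimal performance" concrete, here is a minimal sketch of one simple way an agent's monitoring tool might flag drifting sensor readings: a z-score check against a known-good baseline. The function name `find_drift`, the baseline size, and the 3-sigma threshold are illustrative assumptions, not details of any specific fab system or of a production-grade predictive maintenance method.

```python
from statistics import mean, stdev


def find_drift(readings, baseline_size=20, threshold=3.0):
    """Return indices of readings that deviate from the baseline.

    The first `baseline_size` readings are assumed (hypothetically) to
    represent healthy equipment. Later readings are flagged when they lie
    more than `threshold` standard deviations from the baseline mean.
    """
    if baseline_size < 2 or len(readings) < baseline_size:
        raise ValueError("need at least two baseline readings")

    baseline = readings[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-score is undefined")

    flagged = []
    for i in range(baseline_size, len(readings)):
        z = (readings[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Hypothetical chamber-pressure trace: stable at first, then one outlier.
trace = [100.0, 100.2, 99.8, 100.1, 99.9] * 4 + [100.0, 100.1, 101.5]
suspect_indices = find_drift(trace)
```

In a real deployment, the flagged indices would more plausibly feed a maintenance ticket or an engineer's review queue than trigger action on their own.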

While still being refined and tested, this predictive maintenance concept holds great promise. If fully realized, it could drastically reduce the frequency of unexpected equipment failures, leading to more consistent and reliable production. But the possibilities do not end there. In theory, LLM AI Agents could also dynamically adjust production parameters on the fly, responding to real-time data to optimize processes in previously unimaginable ways.

For instance, imagine a scenario where a slight change in a deposition process could improve yield. With its advanced analytical capabilities, an LLM AI Agent could identify this opportunity and implement the change immediately, optimizing the process in real time. This would lead to reduced waste, lower costs, and higher throughput – all while maintaining the stringent quality standards that the semiconductor industry demands.
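One practical question this scenario raises is how an automatically proposed change stays within safe limits. The sketch below shows one possible guardrail, assuming a hypothetical setpoint (for example, a deposition temperature) with an approved operating window and a maximum step per adjustment. The names `Guardrail` and `apply_proposal` and all numeric values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    """Hypothetical limits for one process parameter."""
    low: float       # lowest approved setpoint
    high: float      # highest approved setpoint
    max_step: float  # largest change allowed in a single adjustment


def apply_proposal(current, proposed, rail):
    """Return the setpoint actually applied for an agent's proposal.

    The requested change is first limited to `max_step` in either
    direction, then the result is clamped to the approved window.
    """
    step = max(-rail.max_step, min(rail.max_step, proposed - current))
    return max(rail.low, min(rail.high, current + step))


# Illustrative values only: a 340-360 window with steps of at most 2 units.
rail = Guardrail(low=340.0, high=360.0, max_step=2.0)
new_setpoint = apply_proposal(current=350.0, proposed=355.0, rail=rail)
```

Here the agent asks for a 5-unit increase but only a 2-unit step is applied, so a single adjustment stays small and any further change has to be proposed again.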

While these scenarios represent exciting possibilities, it is essential to recognize that they are primarily forward-looking. The full implementation of such capabilities is still on the horizon as researchers and engineers continue to explore and refine how LLM AI Agents can be most effectively integrated into these complex processes.

LLM Potential Use Cases In Semiconductor Manufacturing

LLM AI Agents could offer various other benefits in semiconductor manufacturing. These include enhancing quality control, optimizing supply chain management, and streamlining design processes. In each of these areas, the advanced capabilities of LLM AI Agents – such as pattern recognition, predictive analytics, and decision-making – could introduce new levels of intelligence and efficiency, paving the way for future innovations.

Below is an overview of how LLM AI Agents might impact different aspects of semiconductor production if fully realized.

| Use Case | Description | Benefits |
|---|---|---|
| Process Optimization | Real-time monitoring and adjustment of manufacturing processes. | Reduced downtime, lower costs, higher throughput. |
| Quality Control | Early detection of defects by analyzing production data at various stages. | Improved yield, reduced rework, minimized waste. |
| Yield Enhancement | Analyzing data to identify factors affecting yield and implementing corrective actions. | Higher production yields, increased profitability. |
| Test Optimization | Automating and optimizing test procedures, reducing test times while maintaining accuracy. | Faster time-to-market, reduced testing costs. |
| Material Optimization | Predicting material performance and optimizing material usage based on historical data. | Efficient material use, cost reduction, improved product quality. |
| Metrology And Inspection | Enhancing metrology techniques by predicting measurement errors and optimizing calibration. | Increased accuracy in measurements, reduced inspection times. |
| Packaging And Assembly | Streamlining the packaging and assembly processes by optimizing workflows and detecting anomalies. | Improved reliability, reduced defects, enhanced assembly efficiency. |
| Substrate Management | Monitoring and optimizing substrate production and handling processes. | Improved substrate quality, reduced waste, lower production costs. |
| Device Modeling | Assisting in device modeling by optimizing simulation parameters and predicting performance issues. | Faster design iterations, improved device performance, reduced modeling errors. |
| Supply Chain Management | Predicting bottlenecks, optimizing inventory, and sourcing alternative suppliers. | Seamless operations, reduced disruptions, cost savings. |

These use cases illustrate how LLM AI Agents could touch nearly every aspect of semiconductor manufacturing, from the early stages of material selection to the final steps of packaging and assembly.

By integrating these agents into their operations, manufacturers could unlock new levels of precision, efficiency, and innovation, setting the stage for a future where semiconductor production is smarter, faster, and more reliable.

Takeaway: Embracing The Future With LLM AI Agents

As the demand for smaller, faster, and more efficient semiconductor devices intensifies, LLM AI Agents emerge as a transformative force to meet these challenges. These agents are not just automation tools but intelligent partners that bring new insight and capability to semiconductor manufacturing.

By integrating LLM AI Agents, semiconductor manufacturers can optimize almost every stage of production, from process control and yield enhancement to supply chain management and device modeling, potentially achieving higher yields, lower costs, reduced waste, and faster time-to-market.

What truly sets LLM AI Agents apart is their adaptability and continuous learning. Unlike traditional systems, these agents evolve with your processes, constantly improving and adapting to new challenges. This makes them not just a short-term solution but a long-term strategic asset.

As the pace of innovation accelerates, those who embrace LLM AI Agents stand to gain a competitive edge and remain at the forefront of the industry. The future of semiconductor manufacturing is not just about keeping up; it’s about leading the way with smarter, faster, and more efficient operations powered by LLM AI Agents.