Photo by Google DeepMind on Unsplash

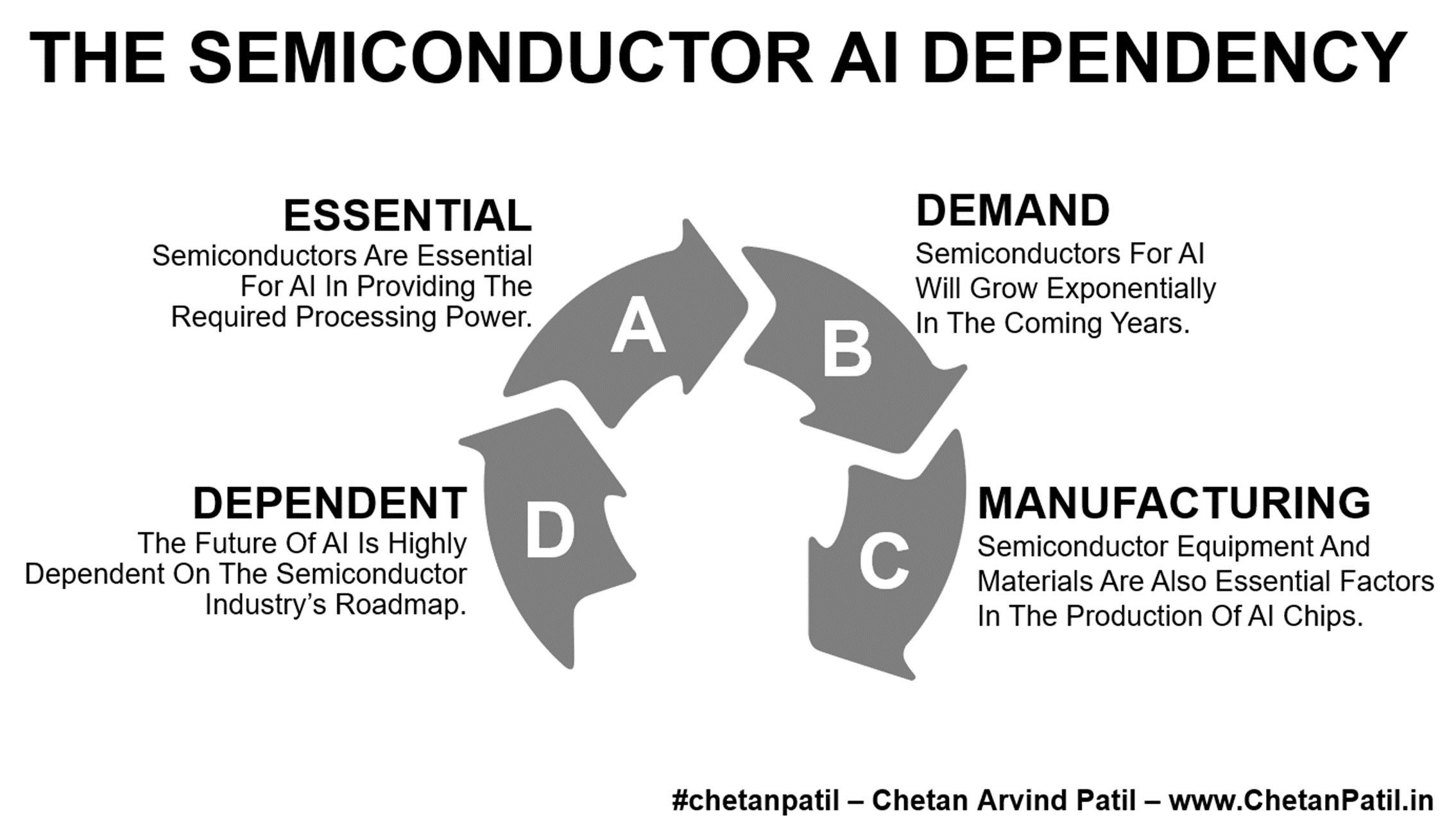

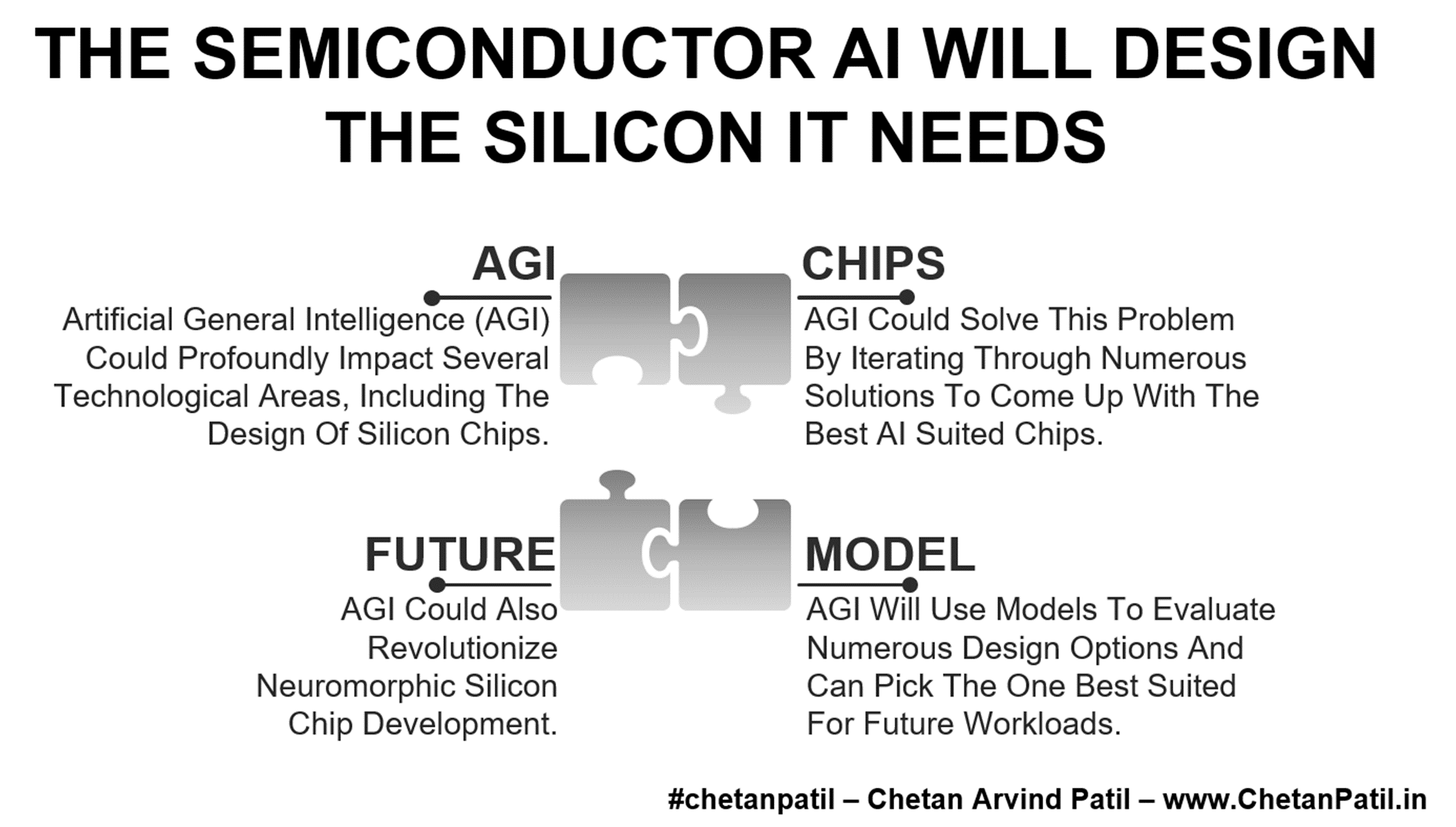

Artificial General Intelligence (AGI) is a hypothetical type of artificial intelligence that can understand or learn any intellectual task a human can. AGI is still a long way off, but if achieved, it could profoundly impact several technological areas, including the design of silicon chips used for AI applications.

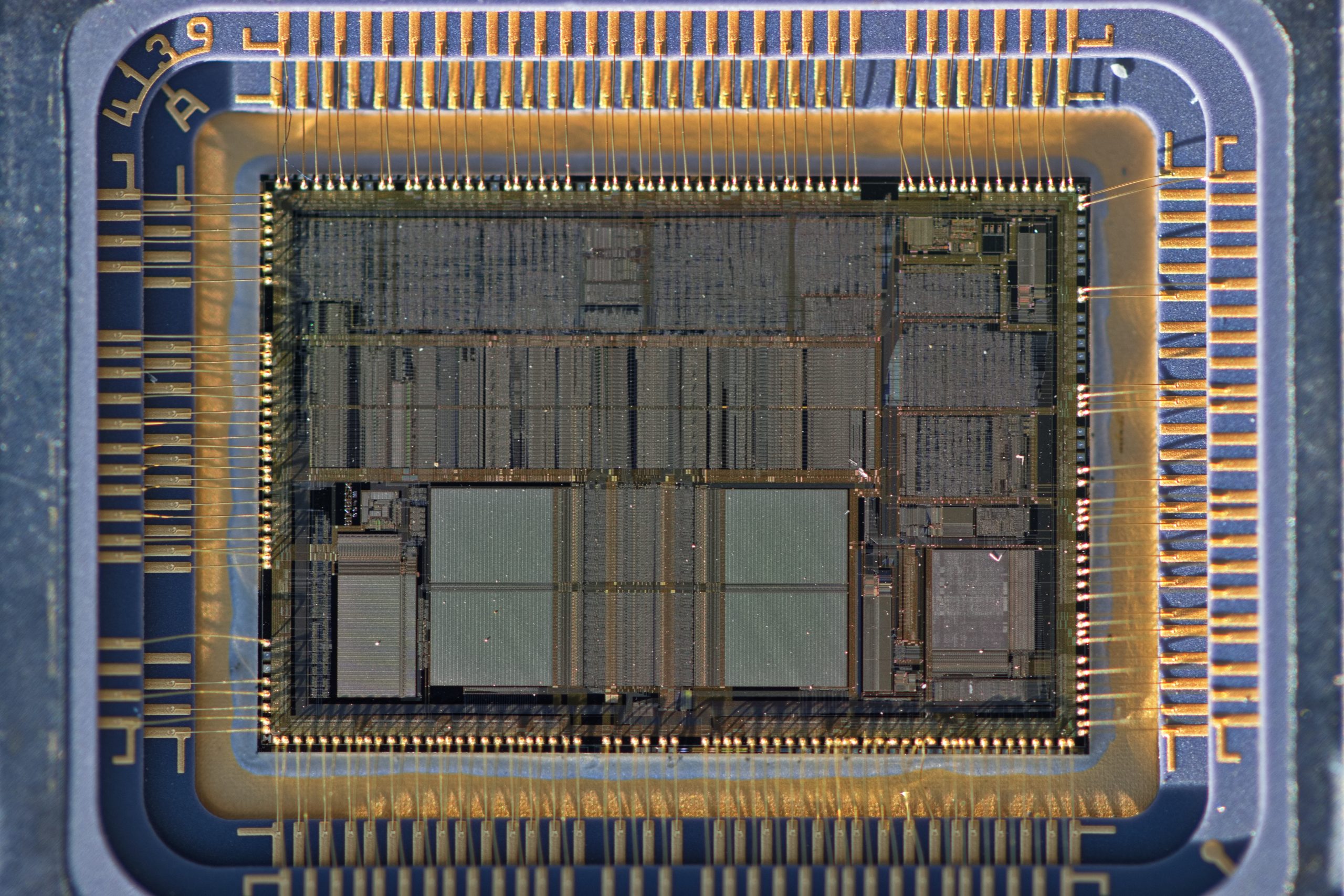

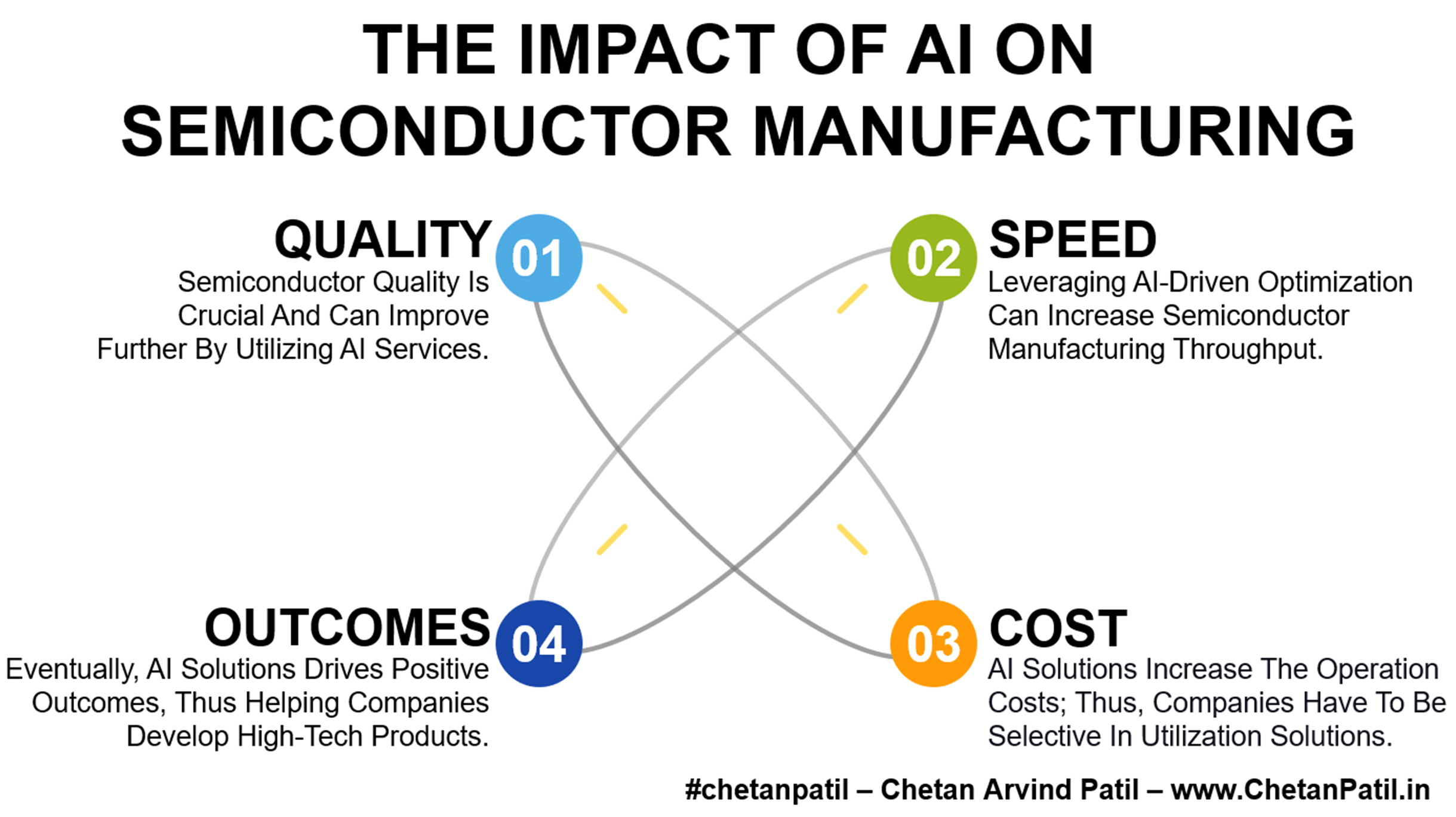

One of the biggest challenges in designing silicon chips is balancing performance with power consumption while ensuring that the chip’s functional blocks can process and manage the workload without slowing down the system. As chips become more powerful, they also tend to become more power-hungry. AGI could help address this problem by identifying the bottlenecks in current silicon chip designs across their many components and workloads. AGI could then use this information to develop new design architectures that are more efficient and power-friendly.

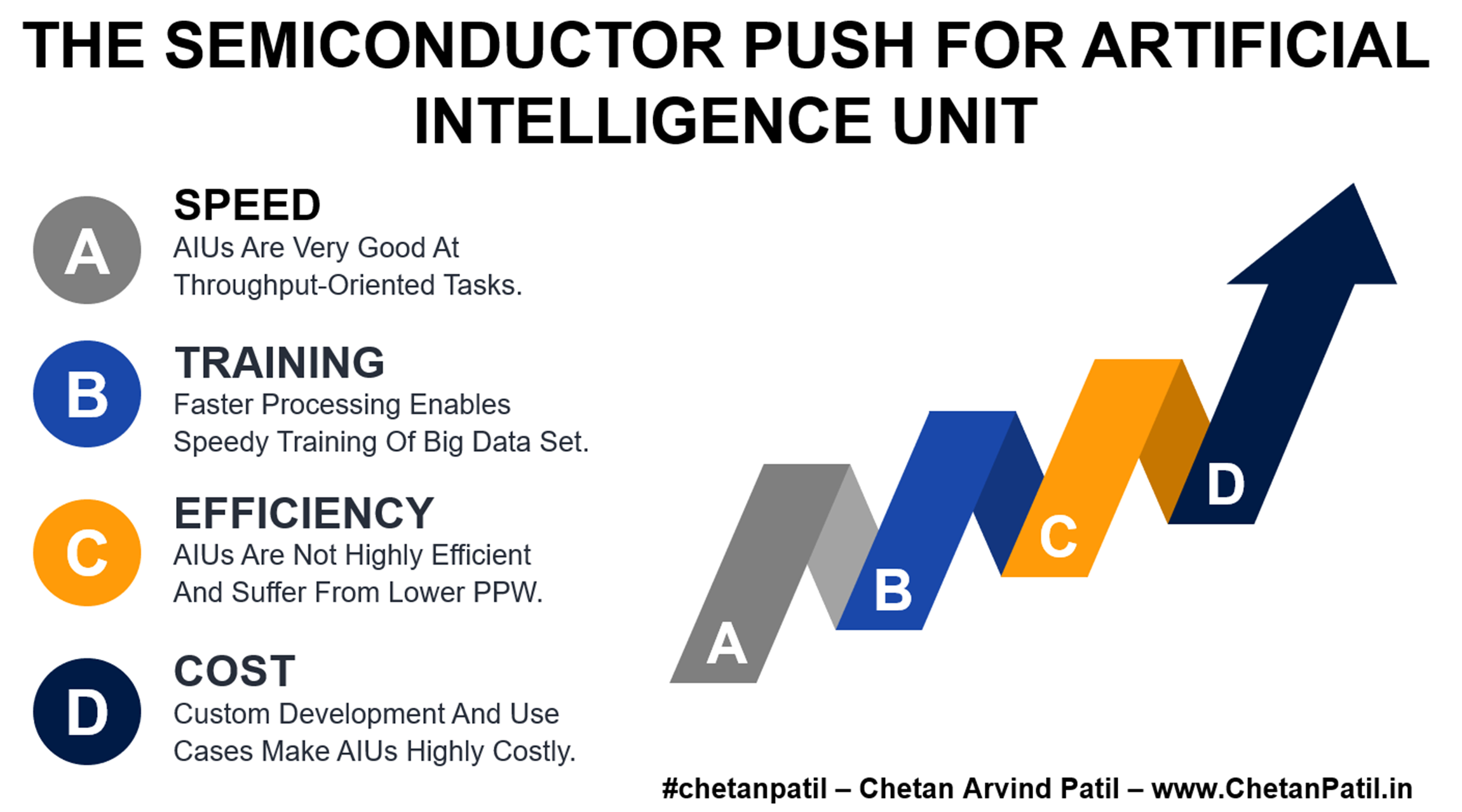

AGI: Artificial General Intelligence (AGI) Is Still A Long Way Off. If Achieved, It Could Profoundly Impact Several Technological Areas, Including The Design Of Silicon Chips.

Chips: AGI Could Solve This Problem By Iterating Through Numerous Solutions To Come Up With The Best AI-Suited Chips.

In addition to improving performance and power efficiency, AGI could also help design silicon chips tailored explicitly for AI applications. For example, AGI could design chips that are better at processing large amounts of data or more efficient at learning new patterns. It could do so by capturing the ins and outs of how different workloads have performed on existing chips. Currently, this analysis is largely manual, and AGI could speed it up tremendously.

Another example: AGI might identify that the chip’s memory bandwidth is a bottleneck, meaning the chip cannot access its memory fast enough to meet the application’s demands. AGI could then use this information to develop a new design architecture that improves the chip’s memory bandwidth.
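One common way to reason about this kind of bottleneck is the roofline model, which bounds attainable throughput by either peak compute or memory bandwidth times arithmetic intensity (operations per byte moved). The sketch below is only an illustration: the `Chip` class, the function names, and all the numbers are hypothetical and do not describe any real chip.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    peak_flops: float      # peak compute throughput, FLOP/s (hypothetical)
    mem_bandwidth: float   # peak memory bandwidth, bytes/s (hypothetical)


def attainable_flops(chip: Chip, arithmetic_intensity: float) -> float:
    """Roofline bound: the lower of the compute roof and the bandwidth roof."""
    return min(chip.peak_flops, chip.mem_bandwidth * arithmetic_intensity)


def is_memory_bound(chip: Chip, arithmetic_intensity: float) -> bool:
    """True when memory bandwidth, not compute, limits the workload."""
    return chip.mem_bandwidth * arithmetic_intensity < chip.peak_flops


# Made-up chip: 100 TFLOP/s of compute, 1 TB/s of memory bandwidth.
chip = Chip(peak_flops=100e12, mem_bandwidth=1e12)
# Made-up workload performing 20 floating-point operations per byte moved.
workload_intensity = 20.0

print(is_memory_bound(chip, workload_intensity))
print(attainable_flops(chip, workload_intensity))
```

In this made-up case the workload can reach only a fraction of the chip’s peak compute, which is the kind of signal that would point a designer, human or AGI, toward improving memory bandwidth.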

AGI will use data and modeling to design next-gen AI silicon chips in several ways. First, AGI will capture data from numerous existing chips to identify the common causes of performance shortfalls. This data will include information about the types of AI applications, the amount of data these applications need to process, and the available power budget.
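As a rough illustration of this first step, the sketch below tallies which bottleneck shows up most often across a set of profiling records. The record fields, chip names, and values are all invented for the example; real profiling data would be far richer.

```python
from collections import Counter

# Hypothetical profiling records, one per (chip, application) run.
records = [
    {"chip": "chip_a", "app": "vision", "data_gb": 120, "power_budget_w": 300, "bottleneck": "memory_bandwidth"},
    {"chip": "chip_b", "app": "language", "data_gb": 900, "power_budget_w": 400, "bottleneck": "memory_bandwidth"},
    {"chip": "chip_c", "app": "speech", "data_gb": 60, "power_budget_w": 150, "bottleneck": "compute"},
    {"chip": "chip_d", "app": "language", "data_gb": 750, "power_budget_w": 350, "bottleneck": "memory_bandwidth"},
    {"chip": "chip_e", "app": "recommendation", "data_gb": 400, "power_budget_w": 250, "bottleneck": "interconnect"},
]


def most_common_bottleneck(rows):
    """Return the (bottleneck, count) pair seen most often across the records."""
    counts = Counter(row["bottleneck"] for row in rows)
    return counts.most_common(1)[0]


print(most_common_bottleneck(records))
```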

Second, AGI will use models to predict the performance of different chip architectures. These models will be built from the data AGI gathers from current chips. AGI will use these models to evaluate different design options and choose the one most likely to meet the performance requirements of future chips.
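A minimal sketch of this model-driven selection might look like the following. It assumes a deliberately simple performance model (a roofline-style bound) and a hard power budget; the `Design` class, the candidate designs, and every number are hypothetical, and a real flow would rely on far more detailed simulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    name: str
    peak_flops: float     # FLOP/s (hypothetical)
    mem_bandwidth: float  # bytes/s (hypothetical)
    power_watts: float    # estimated power draw (hypothetical)


def predicted_throughput(design: Design, intensity: float) -> float:
    """Toy performance model: roofline bound for a workload of given FLOPs/byte."""
    return min(design.peak_flops, design.mem_bandwidth * intensity)


def pick_best(designs, intensity: float, power_budget: float):
    """Choose the feasible design with the highest predicted throughput."""
    feasible = [d for d in designs if d.power_watts <= power_budget]
    if not feasible:
        return None  # no candidate fits within the power budget
    return max(feasible, key=lambda d: predicted_throughput(d, intensity))


# Made-up candidate architectures.
candidates = [
    Design("A", peak_flops=100e12, mem_bandwidth=1e12, power_watts=300.0),
    Design("B", peak_flops=60e12, mem_bandwidth=2e12, power_watts=250.0),
    Design("C", peak_flops=200e12, mem_bandwidth=3e12, power_watts=700.0),
]

best = pick_best(candidates, intensity=20.0, power_budget=400.0)
print(best.name if best else "no feasible design")
```

Here design C has the highest raw numbers but is excluded by the power budget, and design B beats A because the example workload is limited by memory bandwidth rather than compute.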

Model: AGI Will Use Models To Evaluate Numerous Design Options And Can Pick The One Best Suited For Future Workloads.

Future: AGI Could Also Revolutionize Neuromorphic Silicon Chip Development.

Finally, AGI will combine data and models to optimize the design of next-gen AI silicon chips. This optimization will include factors such as the chip’s architecture, the size of its transistors, and the materials used to build it. AGI will also use its knowledge of physics and chemistry to optimize the chip’s design for performance, power efficiency, and scalability.

Applying AGI to silicon chip design is still in its early stages, with few examples of chips designed autonomously. However, it has the potential to revolutionize the way we design and build silicon chips. Working together, AGI and neuromorphic silicon chips could create a new generation of hardware that is more powerful, efficient, and scalable than is possible today.