What Is Silicon-Aware Architecture

As chips approach atomic dimensions, every region of silicon begins to behave differently, shaped by fluctuations in voltage, temperature, stress, and delay. Traditional design methods still rely on fixed timing corners and conservative power margins, assuming stable and predictable behavior.

At three nanometers and below, this assumption breaks down. Modern workloads in artificial intelligence, edge computing, and automotive systems operate under constantly changing physical and electrical conditions. To sustain both performance and reliability, silicon must evolve beyond precision into perception. It must know its own state and react intelligently to it.

A silicon-aware architecture is the structural basis for this evolution.

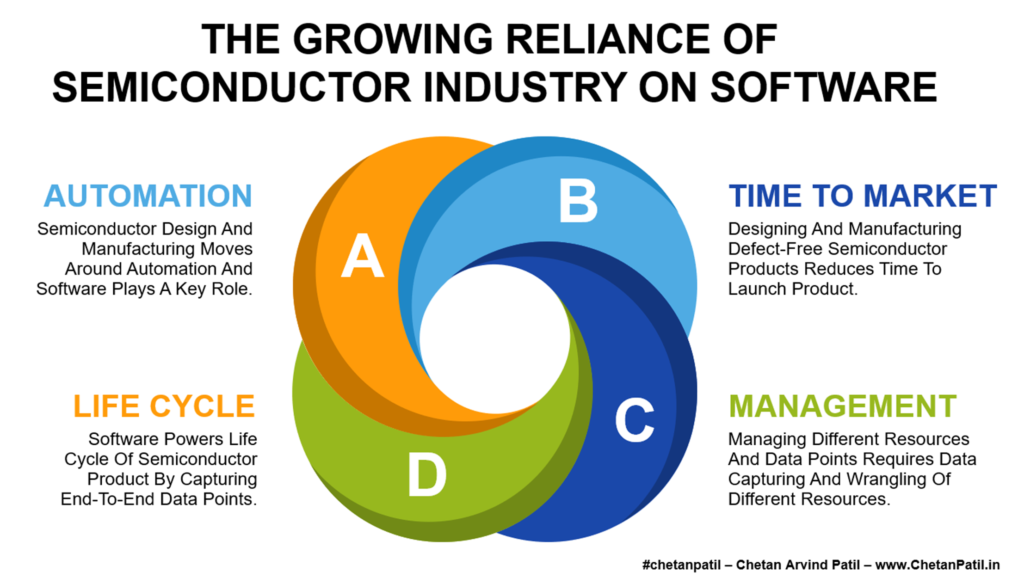

It represents a chip that not only executes logic but also perceives its own electrical and physical behavior in real time. Embedded networks of sensors, telemetry circuits, and adaptive control logic create continuous feedback.

The chip measures temperature, voltage, and aging, interprets the data internally, and fine-tunes its operation to maintain stability and efficiency. In doing so, the silicon transforms from a passive substrate into an active, self-regulating system capable of sustaining peak performance under diverse and unpredictable workloads.

Adapting To Workload Reality

Artificial intelligence workloads have redefined how silicon is stressed, powered, and utilized. Unlike conventional compute tasks that operate within predictable instruction flows, AI inference and training involve highly dynamic activity patterns. Cores experience extreme bursts of power consumption, rapid switching between memory and logic, and localized thermal buildup.

These workloads create transient peaks in current density that can exceed traditional design margins several times over. A static chip designed with fixed voltage and frequency limits cannot manage such fluctuations efficiently: it either wastes energy on oversized margins or compromises reliability.

| Adaptive Function | Challenge In AI Workloads | Traditional Limitation | Silicon-Aware Advantage |
|---|---|---|---|
| Thermal Regulation | Localized hotspots in dense compute clusters | Global throttling reduces overall throughput | Localized sensing and targeted bias control |
| Power Delivery | Rapid current surges during tensor operations | Static voltage rails with limited response | On-die regulation based on real-time telemetry |
| Reliability Aging | High stress cycles on interconnects and transistors | Static lifetime derating | Predictive control extending operational lifetime |
| Workload Distribution | Uneven utilization across cores | Coarse scheduling by firmware | Autonomous, per-region load balancing |

A silicon-aware architecture offers a path forward by allowing the chip to interpret its own activity and respond within microseconds.

Through embedded sensing networks, the chip continuously monitors voltage drop, temperature gradients, and switching density. This information feeds local control loops that modulate power delivery, clock speed, or logic bias according to instantaneous demand.
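The local control loop described above can be illustrated with a small behavioral model. This is only a sketch under stated assumptions: in real silicon the loop would be hardware logic rather than Python, and every name and number here (`SensorReading`, `RegionState`, `control_step`, the temperature and droop limits, the frequency range) is hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

# All limits below are hypothetical, illustrative values,
# not figures from any real process node or product.
TEMP_LIMIT_C = 95.0      # local temperature ceiling
DROOP_LIMIT_MV = 50.0    # maximum tolerated supply droop
TEMP_GUARD_C = 5.0       # hysteresis band below the temperature limit
DROOP_GUARD_MV = 10.0    # hysteresis band below the droop limit
FREQ_MIN_MHZ = 800
FREQ_MAX_MHZ = 2400
FREQ_STEP_MHZ = 100


@dataclass
class SensorReading:
    temp_c: float      # local temperature in degrees Celsius
    vdroop_mv: float   # measured supply droop in millivolts


@dataclass
class RegionState:
    freq_mhz: int      # current clock frequency of this region


def control_step(state: RegionState, reading: SensorReading) -> RegionState:
    """One iteration of a per-region loop: step down, step up, or hold."""
    over_limit = (reading.temp_c > TEMP_LIMIT_C
                  or reading.vdroop_mv > DROOP_LIMIT_MV)
    has_headroom = (reading.temp_c < TEMP_LIMIT_C - TEMP_GUARD_C
                    and reading.vdroop_mv < DROOP_LIMIT_MV - DROOP_GUARD_MV)

    if over_limit:
        target = state.freq_mhz - FREQ_STEP_MHZ
    elif has_headroom:
        target = state.freq_mhz + FREQ_STEP_MHZ
    else:
        target = state.freq_mhz  # inside the guard band: hold steady

    # Clamp to the allowed frequency range.
    target = max(FREQ_MIN_MHZ, min(FREQ_MAX_MHZ, target))
    return RegionState(freq_mhz=target)
```

The guard band keeps the loop from oscillating between stepping up and stepping down when a reading sits right at a limit.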

For AI accelerators and heterogeneous SoCs, this means that compute islands can self-balance, with one region throttling while another ramps up, maintaining efficiency without intervention from system software.
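One way to picture this self-balancing is as a shared power budget that shifts from hot regions to cooler ones while the chip-level total stays constant. The sketch below is a behavioral model only; the function name `rebalance`, the region names, the 90 °C limit, and the 100 mW step are all assumptions made for illustration, not a description of any shipping design.

```python
def rebalance(budgets_mw, temps_c, limit_c=90.0, step_mw=100):
    """Move power budget from regions above limit_c to regions at or below it.

    budgets_mw: dict of region name -> integer power budget in milliwatts
    temps_c:    dict of region name -> local temperature in degrees Celsius
    Returns a new dict; the sum of all budgets is preserved.
    """
    hot = [r for r in budgets_mw if temps_c[r] > limit_c]
    cool = [r for r in budgets_mw if temps_c[r] <= limit_c]
    new_budgets = dict(budgets_mw)

    # Nothing to do if no region is hot, or no region can absorb the budget.
    if not hot or not cool:
        return new_budgets

    # Throttle each hot region by one step (never below zero).
    freed_mw = 0
    for region in hot:
        cut = min(step_mw, new_budgets[region])
        new_budgets[region] -= cut
        freed_mw += cut

    # Hand the freed budget to cool regions, coolest first, so that
    # any indivisible remainder goes to the regions with most headroom.
    share, remainder = divmod(freed_mw, len(cool))
    for i, region in enumerate(sorted(cool, key=lambda r: temps_c[r])):
        new_budgets[region] += share + (1 if i < remainder else 0)

    return new_budgets


if __name__ == "__main__":
    budgets = {"npu0": 1000, "npu1": 1000, "gpu": 1000}
    temps = {"npu0": 95.0, "npu1": 70.0, "gpu": 80.0}
    print(rebalance(budgets, temps))
```

In this example `npu0` gives up 100 mW, which is split evenly between `npu1` and `gpu`, so one region throttles while the others ramp up.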

In effect, silicon awareness enables the chip to become an adaptive substrate. Instead of relying on external management firmware to react after performance loss, the chip learns to anticipate workload transitions and adjust preemptively.
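Anticipation can be as simple as extrapolating a recent trend and acting before a limit is crossed. The sketch below uses a plain linear extrapolation of the last two temperature samples. This is a deliberately simple stand-in, not the method of any particular chip, and the names `predict_temp` and `should_throttle_early`, along with the three-sample horizon, are hypothetical.

```python
def predict_temp(history_c, horizon=1):
    """Linearly extrapolate temperature `horizon` samples ahead.

    history_c: list of recent temperature samples, oldest first.
    """
    if not history_c:
        raise ValueError("history_c must contain at least one sample")
    if len(history_c) < 2:
        return history_c[-1]  # no trend available yet
    slope = history_c[-1] - history_c[-2]  # change per sample interval
    return history_c[-1] + slope * horizon


def should_throttle_early(history_c, limit_c, horizon=3):
    """True if the extrapolated temperature reaches the limit within the horizon."""
    return predict_temp(history_c, horizon) >= limit_c
```

A region reading 80 °C and then 84 °C is still well below a 95 °C limit, but at that rate it would cross the limit within three samples, so the loop can throttle before any violation occurs.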

This is particularly vital in AI systems operating near thermal and electrical limits, where efficiency depends not only on algorithmic intelligence but also on the chip’s ability to interpret its own physical state in real time.

Barriers For Silicon-Aware Architecture

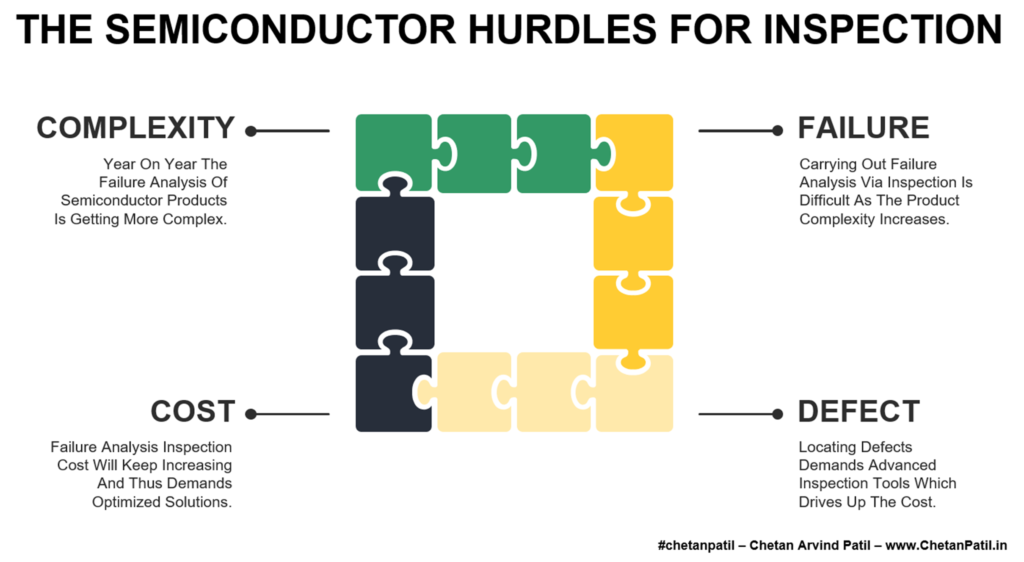

The vision of silicon-aware architecture is compelling, but achieving it introduces significant design and manufacturing challenges. Embedding intelligence into the wafer adds power, area, and verification overhead that can offset the performance gains it seeks to deliver.

The first barrier is integration overhead. Thousands of on-die sensors and control loops must fit within already congested layouts. Each additional circuit increases parasitic load and consumes power, limiting scalability.

The second is data complexity. Continuous telemetry from large SoCs produces massive data volumes. Without localized analytics, monitoring becomes inefficient and costly.
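Localized analytics can be sketched as a per-region filter that collapses a window of raw samples into a short summary and reports only when something noteworthy happened. The function name `summarize_window`, the summary fields, and the threshold are assumptions made for illustration.

```python
def summarize_window(samples, alert_threshold):
    """Reduce a window of raw sensor samples to a compact summary.

    Returns None when the window is empty or its peak stays below
    alert_threshold, so that uneventful windows generate no traffic.
    """
    if not samples:
        return None
    peak = max(samples)
    if peak < alert_threshold:
        return None  # nothing worth reporting upstream
    return {
        "count": len(samples),
        "min": min(samples),
        "max": peak,
        "mean": sum(samples) / len(samples),
    }
```

Instead of streaming every sample off the region, only a four-field record leaves it, and only for windows that crossed the threshold.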

The third is trust and validation. Adaptive behavior complicates deterministic verification and safety certification. Establishing reliability for self-adjusting chips requires new design and test methodologies.

Overcoming these challenges will require tighter co-design between architecture, EDA tools, and foundry process technology.

Can True Self-Awareness Be Achieved

True self-awareness in silicon is an ambitious goal, yet the path toward it is already visible.

Current SoCs employ distributed sensors, adaptive voltage scaling, and machine learning–assisted design tools that enable limited self-monitoring and optimization. These early steps show that awareness is not theoretical but a gradual evolution built through necessity. Each generation of chips adds more autonomy, allowing them to measure, interpret, and respond to internal conditions without human control.

Achieving full awareness will require chips that can learn from their own operating history and refine their behavior over time. Future architectures will merge sensing, inference, and adaptation at the transistor level, supported by AI-driven design and real-time feedback from the field.

The result will be silicon that maintains its performance, predicts degradation, and evolves throughout its lifetime, marking the shift from engineered precision to true cognitive matter.