
The semiconductor industry is at a turning point. For decades, Moore’s Law offered a clear roadmap for progress: double the transistor count, boost performance, and drive costs down.

That predictability is fading as both the computing and semiconductor industries approach physical and economic limits, forcing engineers, designers, and manufacturers to explore entirely new paths forward.

In this new era, success depends on more than just clever design. It requires rethinking architectures around data movement, embedding intelligence into manufacturing, and building roadmaps that tightly connect design choices with yield outcomes.

Let us explore how these shifts are reshaping the industry and setting the stage for the next generation of computing.

Emergence Of Post-Moore Computing Paradigms

For years, Moore’s Law, the prediction that transistor counts double every couple of years, was the North Star guiding performance improvements. It provided a clear sense of direction: keep shrinking transistors, pack more onto a chip, and performance will keep improving. But as the semiconductor industry approaches physical limits, that predictable march forward has slowed. Manufacturing costs are soaring, quantum effects are creeping in at the smallest scales, and simply making transistors smaller is no longer the whole answer.

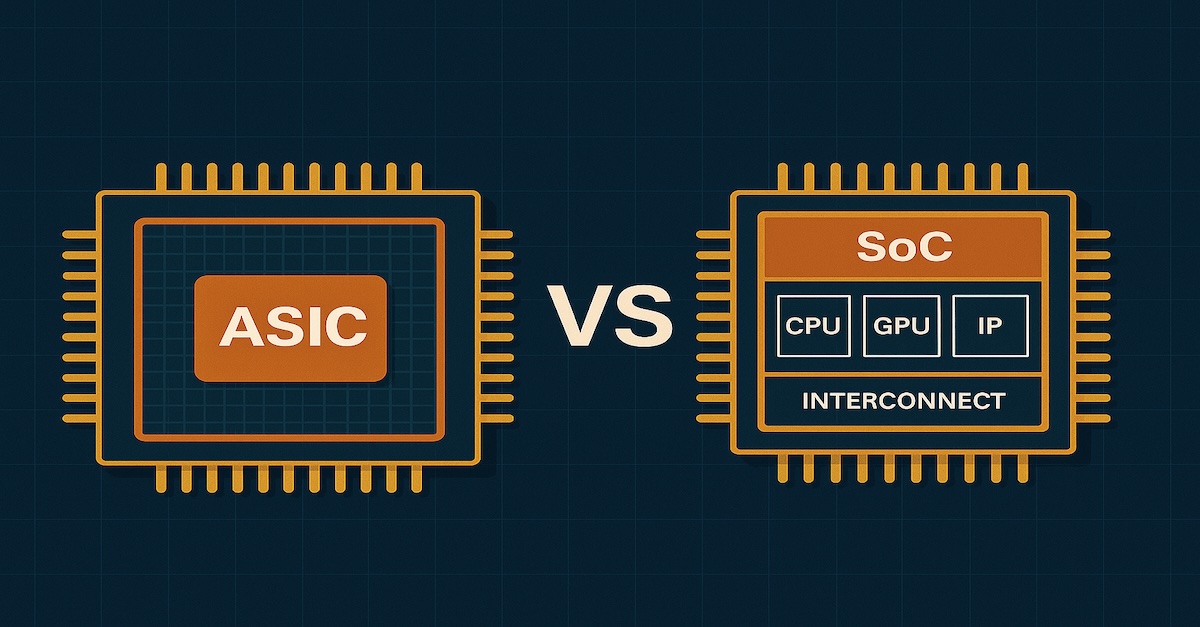

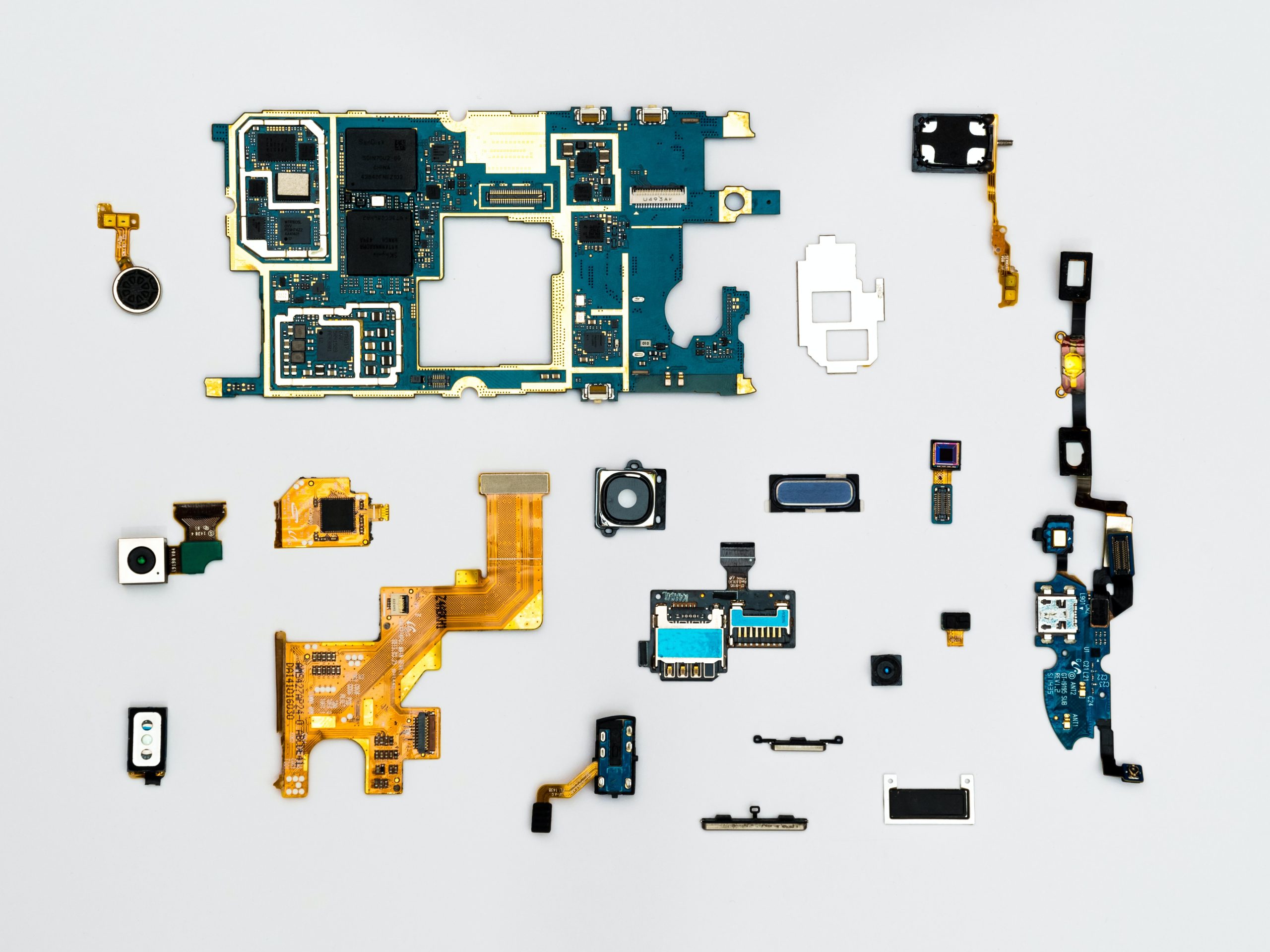

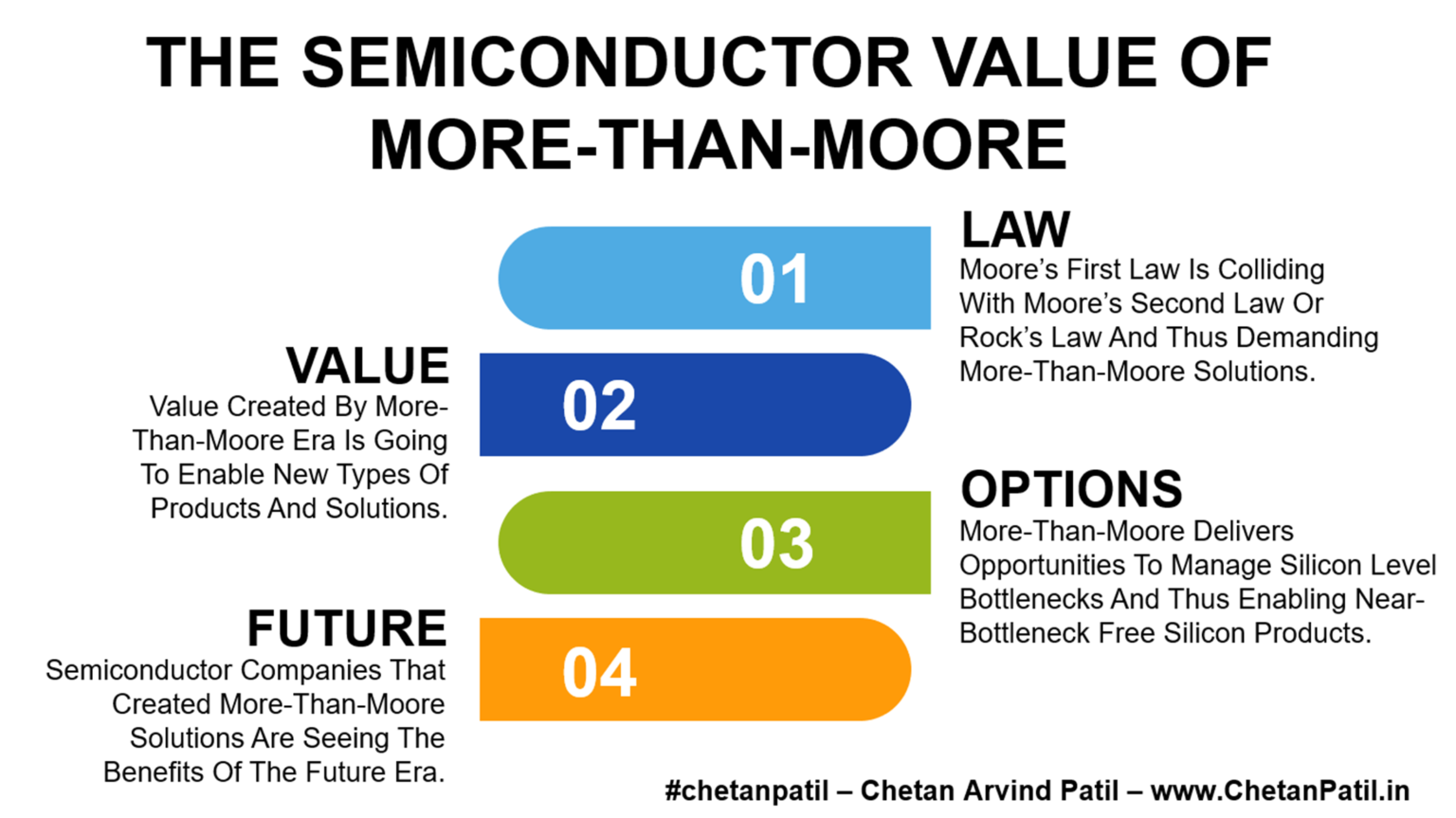

This turning point has given rise to what the industry calls More Than Moore approaches, strategies that rethink progress without relying solely on transistor scaling. Instead of building ever larger monolithic chips, engineers are turning to modular design, chiplets, multi-chip modules, and advanced packaging to push performance further. I explored this shift in The More Than Moore Semiconductor Roadmap, where I explained how mixing different chip types (SoC, MCM, SiP) can shrink board footprint, improve flexibility, and even enhance yield.
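To make the yield argument for smaller dies concrete, here is a minimal sketch using the textbook Poisson die-yield model, Y = exp(-A × D0). The die areas and the defect density are hypothetical values chosen only for illustration, and the model ignores packaging, assembly, and test losses, which matter a great deal in real chiplet products.

```python
import math

def poisson_yield(die_area_mm2: float, defect_density_per_cm2: float) -> float:
    """Poisson die-yield model: Y = exp(-A * D0), with the area A converted to cm^2."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defect_density_per_cm2)

# Hypothetical inputs: one 800 mm^2 monolithic die versus 200 mm^2 chiplets,
# both built on a process with 0.1 defects per cm^2.
D0 = 0.1
monolithic_yield = poisson_yield(800.0, D0)
chiplet_yield = poisson_yield(200.0, D0)

print(f"monolithic die yield: {monolithic_yield:.3f}")  # 0.449
print(f"single chiplet yield: {chiplet_yield:.3f}")     # 0.819
```

Under these assumptions, a defect scraps only one small chiplet instead of the whole large die, so a larger share of the silicon on each wafer ends up usable. Whether that advantage survives the added packaging cost depends on the product.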

Of course, adopting chiplets comes with its challenges. As I discussed in The Hurdles For Semiconductor Chiplets, issues like high-speed interconnect complexity, the need for standard interfaces, and the slower-than-hoped pace of adoption have slowed their mainstream rollout. Encouragingly, some of these barriers are beginning to be addressed through industry-wide collaboration.

In Universal Chiplet Interconnect Express Will Speed Up Chiplet Adoption, I examined how open protocols like UCIe are laying the groundwork for interoperability between vendors, unlocking economies of scale that could make modular architectures the default choice in the years ahead.

Ultimately, the value of these innovations extends beyond just sidestepping Moore’s Law. As I highlighted in The Semiconductor Value Of More Than Moore, these approaches allow the industry to build chips that are tuned for specific workloads, balancing cost, performance, and power in ways traditional scaling never could.

In short, the post-Moore era is not about the end of progress. It is about redefining what progress looks like, moving from chasing smaller transistors to engineering more intelligent systems.

Data-Centric Architectures Redefining Chip Design

As the semiconductor industry shifts away from Moore’s Law, another transformative trend is emerging: designing chips around data, not just arithmetic operations. In today’s landscape, raw compute is no longer the only king; what matters more is how quickly, efficiently, and intelligently data can be handled.

Data-centric architectures treat data flow and handling as the heartbeat of the system.

Rather than moving data through complex pipelines, these architectures embed processing where data lives, right in memory or near the sensors that generate it. This minimizes delays, slashes energy use, and magnifies performance.

In my post The Semiconductor Data Driven Decision Shift, I explored how data collected from fabrication, including inline metrology, critical dimensions, and yield analytics, is transforming design loops. The hardware must now be agile enough to feed, respond to, and benefit from data streams in real time.

Similarly, as covered in The Hybrid AI And Semiconductor Nexus, the convergence of AI and semiconductors is accelerating edge intelligence. When chips must support neural networks locally on mobile, IoT, or edge devices, the data-centric mindset demands memory hierarchies and compute structures that prioritize data movement over raw transistor counts.

Looking ahead, the semiconductor industry (alongside the computing industry) will see architectures that tightly couple storage and compute, such as near-memory or in-memory computing, to process data where it resides. This is not theoretical: industries already experimenting with these paradigms are seeing significant gains in AI workloads, graph analytics, and streaming data operations.

In essence, data-centric design reframes the challenge. Instead of asking “How many operations per second can an architecture perform?”, customers will now ask, “How smartly and swiftly can the silicon architecture handle data at scale?”

Yield Optimization As A Critical Success Factor

As the semiconductor industry sharpens its focus on smarter, data-centric architectures, it becomes clear that progress is not just about innovative chip design; it is also about turning those designs into reality cost-effectively. That is where yield optimization comes in. It is the art and science of ensuring that as many chips as possible coming off the production line actually work, and do so reliably.

High yield is not just a technical win; it is a business one, too. In The Economics Of Semiconductor Yield, I explored how yield directly impacts cost per chip, profit margins, and competitiveness. When yield climbs, manufacturers can lower prices, reinvest in innovation, and stay agile in rapidly shifting markets.
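The link between yield and cost per chip can be sketched with simple arithmetic: the cost of a wafer is spread only over the dies that work. The wafer cost and die count below are hypothetical figures for illustration, not data from any fab, and the sketch leaves out test, packaging, and overhead costs.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, die_yield: float) -> float:
    """Wafer cost divided by the number of working dies (dies_per_wafer * die_yield)."""
    if not 0.0 < die_yield <= 1.0:
        raise ValueError("die_yield must be in the range (0, 1]")
    return wafer_cost / (dies_per_wafer * die_yield)

# Hypothetical wafer: 10,000 currency units, 500 candidate dies.
for y in (0.6, 0.9):
    print(f"yield {y:.0%}: {cost_per_good_die(10_000, 500, y):.2f} per good die")
```

With these made-up inputs, lifting yield from 60% to 90% cuts the cost of each good die by a third, which is the kind of margin the paragraph above refers to.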

But yield is not something that magically appears. It must be managed. In The Semiconductor Smart Factory Basics, I examined how real-time data, such as wafer metrology and inline process metrics, can alert fabs to yield drifts early, allowing for proactive adjustments rather than costly reactive fixes.
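As one illustration of catching drift early, the sketch below runs an exponentially weighted moving average (EWMA) control chart over a stream of inline measurements. EWMA is a standard statistical process control technique that I am using here as an example; it is not a description of any specific fab's monitoring system. The function name, the target, the sigma, and the sample readings are all hypothetical.

```python
import math

def ewma_drift_index(values, target, sigma, lam=0.2, width=3.0):
    """Return the index of the first sample whose EWMA leaves the control band, else None.

    z_t = lam * x_t + (1 - lam) * z_(t-1), starting from z_0 = target.
    The band is target +/- width * sigma * sqrt(lam / (2 - lam) * (1 - (1 - lam)^(2t))).
    """
    z = target
    for t, x in enumerate(values, start=1):
        z = lam * x + (1.0 - lam) * z
        limit = width * sigma * math.sqrt(
            lam / (2.0 - lam) * (1.0 - (1.0 - lam) ** (2 * t))
        )
        if abs(z - target) > limit:
            return t - 1  # zero-based index of the offending sample
    return None

# Hypothetical critical-dimension readings: stable at first, then creeping upward.
readings = [50.2, 49.8, 50.1, 49.9, 50.0, 50.5, 51.0, 51.5, 52.0, 52.5, 53.0]
drift_at = ewma_drift_index(readings, target=50.0, sigma=1.0)
print("drift flagged at sample index:", drift_at)
```

Because the EWMA accumulates small shifts that stay in the same direction, it can flag a slow creep that no single reading would reveal on its own. The smoothing factor and band width are tuning choices that trade sensitivity against false alarms.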

Understanding why yield issues arise is just as essential. As discussed in The Semiconductor Technical Approach To Defect Pattern Analysis For Yield Enhancement, analyzing defect patterns, whether they are random or systematic, lets engineers pinpoint root causes of failures and fine-tune processes.
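A very simplified way to separate the two kinds of defects is to stack wafer maps and look for die positions that fail again and again: random defects land in different places on each wafer, while systematic ones tend to repeat. The sketch below assumes each wafer map is just a set of failing (x, y) die coordinates. The function name, the threshold, and the data are hypothetical, and real defect pattern analysis uses far richer spatial signatures.

```python
from collections import Counter

def repeating_fail_sites(wafer_maps, min_fraction=0.8):
    """Return die sites that fail on at least min_fraction of the wafers.

    wafer_maps: list of sets of (x, y) failing die coordinates, one set per wafer.
    """
    counts = Counter(site for wafer in wafer_maps for site in wafer)
    n_wafers = len(wafer_maps)
    return sorted(site for site, c in counts.items() if c / n_wafers >= min_fraction)

# Hypothetical lot of four wafers: die (0, 0) fails every time, the rest look random.
wafers = [
    {(0, 0), (3, 4)},
    {(0, 0), (1, 2)},
    {(0, 0), (5, 1)},
    {(0, 0), (2, 2)},
]
systematic_sites = repeating_fail_sites(wafers)
print(systematic_sites)  # [(0, 0)]
```

A site flagged this way points toward a repeatable cause, such as a mask or tool issue, while the scattered failures are more likely to be random defects.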

In short, yield optimization is the bridge from clever design to efficient production. When a chip’s architecture is data savvy but the fab process cannot reliably deliver functional units, everything falls apart. By embedding data-driven monitoring, agile control mechanisms, and targeted defect analysis into manufacturing, yield becomes the silent enabler of performance innovation.

Bridging Data And Yield To Enable Strategies For Future-Ready Chipmaking

From data-centric architectures to yield optimization, the next step is clear: unite these forces within a single, forward-looking roadmap. Such a roadmap makes data and yield inseparable from the earliest design stages to high-volume manufacturing.

In The Semiconductor Learning Path: Build Your Own Roadmap Into The Industry, I outlined how understanding the whole value chain from design to manufacturing enables data-driven decisions that directly influence yield.

Disruptions like those in The Impact Of Semiconductor Equipment Shortage On Roadmap show why yield data and adaptive planning must be built in from the start. Real-time insights allow teams to adjust plans without losing competitiveness.

At the ecosystem level, India’s Roadmap To Semiconductor Productisation shows how aligning design, manufacturing, and policy can create resilient industries. Technical alignment is just as important. In The Need To Integrate Semiconductor Die And Package Roadmap, I explained why die and package planning must merge to optimise yield and performance.

Finally, the Semiconductor Foundry Roadmap Race illustrates how foundries are embedding yield and data feedback into their roadmaps, making them competitive assets rather than static plans.

Bridging data and yield within a cohesive roadmap turns chipmaking into a dynamic, feedback-driven process, essential for strategies that are truly ready for the post-Moore era.

In summary, the post-Moore era demands a different mindset. Progress is no longer a straight line of shrinking transistors, but a complex interplay of more innovative architectures, intelligent data handling, and disciplined manufacturing.

By uniting these elements through thoughtful roadmaps, both the computing and the semiconductor industries can continue delivering breakthroughs that meet the demands of AI, edge computing, and emerging applications. The path ahead will be shaped by those who can integrate design ingenuity, data-driven insight, and yield mastery into one continuous cycle of innovation.