Image Generated Using 4o

How DFT Evolved Beyond Test To Impact Reliability

In the early years of integrated circuit (IC) design, Design-for-Testability (DFT) was introduced primarily to improve manufacturing fault coverage and lower production test costs. Techniques such as scan chains, Built-In Self-Test (BIST), and boundary scan were developed to ensure that devices could be tested efficiently after fabrication. The goal was straightforward: detect manufacturing defects, typically modeled as stuck-at faults or shorts, and maximize the number of good parts shipped.

Reliability, however, was handled in a separate part of the development cycle. It focused on burn-in testing, life testing, and field failure analysis, activities typically performed long after the design phase had concluded. Early semiconductor technologies, with larger geometries and lower integration density, were far more tolerant of marginal behavior, so this separation between test and reliability efforts worked without significant consequences.

However, as the industry pushed into smaller nodes and began designing chips for automotive, medical, and aerospace applications, latent defects and marginal circuit behaviors became much harder to contain. A DFT strategy aimed only at catching gross manufacturing faults was no longer sufficient. Subtle weaknesses introduced during fabrication could evolve into catastrophic failures after months or years of use in harsh real-world environments.

It became increasingly clear that DFT had to evolve. It was no longer just about passing production tests. It had to become a tool for reliability assurance: enabling early detection of life-limiting defects, supporting real-time health monitoring, and even providing mechanisms for post-silicon repair.

In my experience, companies that recognized and embraced this expanded view of DFT, starting in the late 1990s and early 2000s, saw dramatic reductions in field returns and warranty failures, giving them a lasting advantage in high-reliability markets.

Techniques That Make DFT A Reliability Enabler

Modern Design-for-Testability (DFT) practices go well beyond providing basic test access. Today, DFT intentionally embeds structures and strategies that contribute directly to early failure detection, ongoing health monitoring, and long-term reliability assurance. Key techniques that have reshaped DFT’s role include:

Margin-Aware Testing: Contemporary DFT architectures are designed not only to detect functional faults but also to uncover marginal timing vulnerabilities. Techniques such as path delay fault testing, dynamic timing analysis, and voltage and temperature corner testing are now integrated into scan methodologies. These approaches expose subtle risks such as race conditions, timing slippage, and setup/hold margin failures that might otherwise surface only after prolonged field operation or under environmental stress.
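Here is a minimal sketch of the margin-aware idea in Python. It computes setup slack for the same paths measured at two PVT corners and flags every path whose slack falls inside a guardband, including paths that still pass. The path names, corner labels, delay values, and 0.10 ns guardband are all hypothetical. A real flow would take these numbers from static timing analysis or on-silicon path-delay tests, not from a hand-written list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathTiming:
    """One launch-to-capture path measured at one PVT corner (hypothetical values)."""
    name: str
    corner: str
    data_arrival_ns: float  # launch clock edge to data arrival at the capture flop
    setup_ns: float         # setup requirement of the capture flop
    clock_period_ns: float


def setup_slack_ns(p: PathTiming) -> float:
    """Setup slack: positive means the data settles before the setup window."""
    return p.clock_period_ns - (p.data_arrival_ns + p.setup_ns)


def marginal_paths(paths, guardband_ns):
    """Return (name, corner, slack) for every path whose slack is below the guardband.

    This catches paths that still pass (0 <= slack < guardband) as well as
    paths that fail outright (slack < 0).
    """
    flagged = []
    for p in paths:
        slack = setup_slack_ns(p)
        if slack < guardband_ns:
            flagged.append((p.name, p.corner, slack))
    return flagged


if __name__ == "__main__":
    # Hypothetical 1 GHz paths at a nominal corner and a low-voltage, cold corner.
    paths = [
        PathTiming("alu_carry", "nominal", 0.70, 0.05, 1.0),
        PathTiming("alu_carry", "lowV_cold", 0.88, 0.06, 1.0),
        PathTiming("fifo_ptr", "nominal", 0.60, 0.05, 1.0),
        PathTiming("fifo_ptr", "lowV_cold", 0.97, 0.06, 1.0),
    ]
    for name, corner, slack in marginal_paths(paths, guardband_ns=0.10):
        print(f"{name:10s} {corner:10s} slack={slack:+.3f} ns")
```

In this example the hypothetical `alu_carry` path still passes at its stressed corner, but with only about 0.06 ns of slack. A nominal-only test would never flag it. Hold margin could be screened the same way using minimum delays and hold requirements.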

Embedded Health Monitors: Modern ICs embed a range of on-chip monitors that track critical reliability parameters in real time. These include thermal sensors, voltage droop detectors, electromigration stress monitors, and aging sensors that target mechanisms such as BTI (Bias Temperature Instability) and HCI (Hot Carrier Injection). By continuously observing these degradation mechanisms, the system can identify early signs of wear-out well before traditional end-of-life testing would catch them.
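One common way to turn an aging sensor into a wear-out alarm is a differential ring-oscillator measurement. One oscillator ages alongside the functional logic, while a reference oscillator is kept largely unstressed. Taking the ratio of their frequencies cancels most of the voltage and temperature variation the two share. The sketch below assumes that arrangement. The frequencies, the `year2`/`year6` labels, and the 3% alarm threshold are hypothetical. Production monitors typically count these frequencies in hardware and report them through a test or telemetry interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoReading:
    """Paired ring-oscillator frequencies sampled at the same moment (hypothetical)."""
    stressed_mhz: float   # oscillator that ages with the functional logic (BTI/HCI)
    reference_mhz: float  # oscillator kept mostly unstressed, e.g. power-gated


def _ratio(r: RoReading) -> float:
    # Shared voltage/temperature effects scale both oscillators and largely cancel here.
    return r.stressed_mhz / r.reference_mhz


def aging_degradation(baseline: RoReading, current: RoReading) -> float:
    """Fractional slowdown of the stressed oscillator relative to its time-zero ratio."""
    return 1.0 - _ratio(current) / _ratio(baseline)


def wearout_alarm(baseline: RoReading, current: RoReading,
                  threshold: float = 0.03) -> bool:
    """True once degradation reaches the (hypothetical) alarm threshold."""
    return aging_degradation(baseline, current) >= threshold


if __name__ == "__main__":
    t0 = RoReading(stressed_mhz=1000.0, reference_mhz=1000.0)   # recorded at final test
    year2 = RoReading(stressed_mhz=940.0, reference_mhz=960.0)  # hot die plus some aging
    year6 = RoReading(stressed_mhz=920.0, reference_mhz=960.0)
    for label, r in (("year2", year2), ("year6", year6)):
        print(label, f"{aging_degradation(t0, r):.2%}", wearout_alarm(t0, r))
```

In the `year2` reading, the stressed oscillator's raw frequency has dropped 6%. The reference oscillator has also dropped 4%, though, so only about 2% is attributed to aging. That distinction is the reason to measure differentially instead of using a single oscillator.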

Built-In Self-Repair (BISR): BISR originated in memory arrays, where faulty rows or columns are replaced with spares, but its philosophy has since expanded. Logic BISR concepts now incorporate spare functional blocks, redundant paths, or self-reconfigurable circuits. These enable post-manufacture defect mitigation and even in-field dynamic recovery, capabilities that are essential for high-availability, mission-critical applications such as autonomous driving and aerospace systems.
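The memory-array origin of BISR can be illustrated with a deliberately simplified, row-only repair sketch. Failing bit addresses reported by memory BIST are grouped by row, each faulty row is assigned a spare row, and the address decoder steers repaired rows to their spares. The function names and fail data are hypothetical. Real BISR engines usually handle spare rows and spare columns together, which requires a proper redundancy-analysis algorithm rather than this simple greedy mapping.

```python
from typing import Dict, Iterable, Optional, Tuple


def allocate_spare_rows(fail_bits: Iterable[Tuple[int, int]],
                        num_spares: int) -> Optional[Dict[int, int]]:
    """Assign each faulty row a spare row; None means the array is unrepairable.

    fail_bits holds (row, column) addresses reported by memory BIST.
    """
    faulty_rows = sorted({row for row, _col in fail_bits})
    if len(faulty_rows) > num_spares:
        return None
    return {row: spare for spare, row in enumerate(faulty_rows)}


def decode_row(logical_row: int, remap: Dict[int, int]) -> Tuple[str, int]:
    """Address decode after repair: repaired rows are steered to their spare."""
    if logical_row in remap:
        return ("spare", remap[logical_row])
    return ("main", logical_row)


if __name__ == "__main__":
    fails = [(12, 3), (12, 7), (40, 0)]   # two bad bits in row 12, one in row 40
    remap = allocate_spare_rows(fails, num_spares=2)
    print(remap)                           # repair map that would be stored, e.g. in fuses
    print(decode_row(12, remap), decode_row(13, remap))
```

Because the repair map is just data, the same lookup can be reloaded at every power-up. It can also be extended in the field if an in-system memory test finds a new failing row and a spare is still available.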

Accelerated Degradation Detection: Instead of relying solely on lengthy burn-in, modern DFT includes stress-inducing scan patterns and high-activity test sequences designed to make latent defects manifest sooner. Techniques such as elevated-voltage toggling, thermal cycling stress patterns, and high-frequency clock strobing allow manufacturers to screen out devices at higher risk of early-life failure during final test, significantly reducing the “infant mortality” tail of the reliability distribution.
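"High-activity" can be made concrete by modeling shift toggling in a single scan chain. The sketch below compares a quiet fill, a random fill, and a checkerboard stress pattern that flips every scan cell on every shift clock. The chain length and function names are hypothetical. The model covers only pattern activity: the elevated voltage and clock conditions applied by the tester are not modeled. On real silicon, this activity also has to stay within the power-delivery budget, because excessive shift power can cause IR-drop-induced false failures.

```python
import random
from typing import List, Sequence, Tuple


def shift_toggle_rate(initial: Sequence[int], scan_in: Sequence[int]) -> float:
    """Fraction of scan cells that flip per shift clock, averaged over the shift.

    Each shift moves every cell's value one position down the chain and loads
    the next scan-in bit into cell 0.
    """
    state = list(initial)
    toggles = 0
    for bit in scan_in:
        new_state = [bit] + state[:-1]
        toggles += sum(a != b for a, b in zip(state, new_state))
        state = new_state
    return toggles / (len(state) * len(scan_in))


def checkerboard_stress(chain_length: int, shifts: int) -> Tuple[List[int], List[int]]:
    """Alternating 0/1 preload and scan-in so every cell toggles on every shift."""
    initial = [i % 2 for i in range(chain_length)]
    # Cell 0 holds 0, so the first scan-in bit must be 1 to flip it, then alternate.
    scan_in = [(k + 1) % 2 for k in range(shifts)]
    return initial, scan_in


if __name__ == "__main__":
    rng = random.Random(0)
    n = 64  # hypothetical chain length
    quiet = shift_toggle_rate([0] * n, [0] * n)
    rand = shift_toggle_rate([rng.randint(0, 1) for _ in range(n)],
                             [rng.randint(0, 1) for _ in range(n)])
    stress = shift_toggle_rate(*checkerboard_stress(n, n))
    print(f"quiet={quiet:.2f} random={rand:.2f} stress={stress:.2f}")
```

The checkerboard reaches a toggle rate of 1.0, while a random fill typically lands near 0.5. That gap is the extra switching stress a dedicated stress pattern adds over ordinary test content.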

Together, these techniques transform DFT from a purely manufacturing-oriented tool into a cornerstone of predictive reliability engineering. In my direct experience across multiple technology nodes, products that integrated these advanced DFT capabilities consistently achieved twice the mission life of similar designs that treated DFT as a late-stage add-on.

The lesson is clear: DFT, designed with reliability in mind, becomes a silent but critical insurance policy for every IC leaving the factory.

Lessons Learned From Real-World Failures

There is no substitute for experience, especially the hard kind. In the semiconductor industry, field failures often reveal gaps that qualification testing alone cannot uncover. A common failure mode across technologies, particularly in mission-critical applications, involves small timing shifts and voltage droop effects that nominal-condition scan testing does not capture.

These subtle issues may pass initial qualification yet surface under extreme environmental stresses, such as cold starts or wide voltage variations.

These cases highlight a critical truth: reliability-driven DFT must extend beyond validating basic functionality. It must be architected to validate timing margins, stress responses, and full-system robustness under real-world operating extremes. Without a margin-aware, environment-sensitive approach, latent vulnerabilities can remain hidden until the device is in the field, leading to costly returns, warranty claims, and potential safety risks.

Modern best practices now mandate that DFT strategies include corner-aware testing across full environmental ranges, embedded degradation monitors for voltage, temperature, and electromigration, and qualification-resilient test logic that does not become a new failure source itself.
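Corner-aware testing starts with a test plan that does not quietly drop the extreme combinations, such as a low-voltage cold start. The sketch below enumerates the full supply-by-temperature matrix and marks the combinations that pair an off-nominal supply with a temperature extreme. The 0.80 V supply, the ±10% tolerance, the -40 °C to 125 °C range, and the `corner_plan` helper are hypothetical. A real plan would also cover process corners and frequency targets.

```python
from itertools import product
from typing import Dict, List, Sequence


def corner_plan(vdd_nominal: float, vdd_tolerance: float,
                temps_c: Sequence[float]) -> List[Dict[str, object]]:
    """Enumerate every (supply, temperature) test corner.

    A corner is marked 'extreme' when it combines an off-nominal supply with
    the coldest or hottest temperature, e.g. a low-voltage cold start.
    """
    vdds = (round(vdd_nominal * (1 - vdd_tolerance), 3),
            vdd_nominal,
            round(vdd_nominal * (1 + vdd_tolerance), 3))
    t_min, t_max = min(temps_c), max(temps_c)
    plan = []
    for vdd, temp in product(vdds, temps_c):
        plan.append({
            "vdd": vdd,
            "temp_c": temp,
            "extreme": vdd != vdd_nominal and temp in (t_min, t_max),
        })
    return plan


if __name__ == "__main__":
    # Hypothetical 0.8 V core with a +/-10% supply and a -40 to 125 C range.
    for corner in corner_plan(0.80, 0.10, (-40, 25, 125)):
        print(corner)
```

Running the scan and path-delay content at every row of this plan, not only at nominal, is what gives cold-start and supply-variation escapes a chance to show up before shipment.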

DFT is no longer viewed as a mere checklist item or manufacturing tool. It is a fundamental mindset shift, treating every test structure and validation point as an active contributor to long-term product reliability and customer trust.

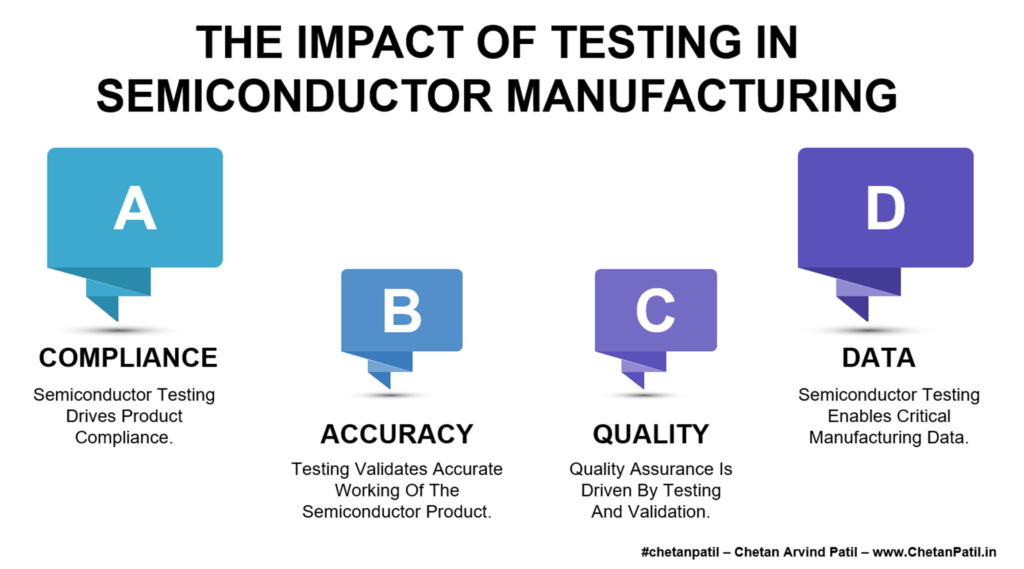

Best Practices To Align DFT And Reliability

Clear patterns have emerged from decades of trial and error and technical evolution. Teams that successfully use Design-for-Testability (DFT) to enhance IC reliability follow a deliberate, disciplined approach that starts early, embeds margin awareness, and treats DFT as an investment, not a burden.

Below is a summary of the best practices that consistently deliver results across complex and mission-critical applications.

| Practice | Key Focus |
|---|---|
| Start Early | Integrate DFT and reliability engineering during architectural planning, not after layout completion. |
| Test Margins, Not Just Logic | Validate path delays, power integrity, and signal integrity margins using dedicated DFT hooks. |
| Embed Monitors Thoughtfully | Place thermal sensors, voltage droop detectors, and electromigration monitors strategically at critical locations. |
| Plan For In-Field Visibility | Architect DFT structures that enable monitoring during system operation, not just at manufacturing test. |
| Stress Test Intelligently | Use stress-inducing scan patterns and built-in stress circuits to detect infant mortality risks early. |
| Treat DFT As A Reliability Asset | Shift mindset: view DFT as an insurance policy against field failures and warranty costs, not as overhead. |
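One common way to realize the "Plan For In-Field Visibility" practice is logic BIST. An on-chip LFSR generates pseudo-random patterns, and a MISR (multiple-input signature register) compresses the circuit's responses into a signature. That signature is compared against a stored golden value, so the same structure can run at power-on in the field. The sketch below is a deliberately tiny, software-only model of that loop. The 4-bit width, the polynomial, the Gray-code circuit under test, and the injected stuck-at-1 fault are all hypothetical choices for illustration. Real LBIST uses much wider registers. A MISR can also alias, meaning a faulty response stream can, with small probability, produce the golden signature.

```python
WIDTH = 4
MASK = (1 << WIDTH) - 1


def lfsr_step(state: int) -> int:
    """4-bit Fibonacci LFSR, polynomial x^4 + x^3 + 1 (maximal length, period 15)."""
    feedback = (state ^ (state >> 1)) & 1
    return ((state >> 1) | (feedback << (WIDTH - 1))) & MASK


def lfsr_patterns(seed: int, count: int):
    """Pseudo-random test patterns, as an on-chip pattern generator would produce."""
    state = seed & MASK
    for _ in range(count):
        yield state
        state = lfsr_step(state)


def misr_signature(responses, seed: int = 0) -> int:
    """Multiple-input signature register: shift, then XOR in each response word."""
    sig = seed & MASK
    for word in responses:
        sig = lfsr_step(sig) ^ (word & MASK)
    return sig


def good_cut(x: int) -> int:
    """Hypothetical 4-bit circuit under test (binary-to-Gray conversion)."""
    return (x ^ (x >> 1)) & MASK


def faulty_cut(x: int) -> int:
    """Same circuit with output bit 0 stuck-at-1."""
    return good_cut(x) | 1


def run_lbist(cut, seed: int = 1, count: int = 15) -> int:
    """Apply `count` LFSR patterns to `cut` and compact the responses into a signature."""
    return misr_signature(cut(p) for p in lfsr_patterns(seed, count))


if __name__ == "__main__":
    golden = run_lbist(good_cut)       # computed at design time and stored on chip
    observed = run_lbist(faulty_cut)   # produced in the field, e.g. at power-on
    print("PASS" if observed == golden else "FAIL")
```

The shift-and-XOR update is linear over GF(2). As a result, the faulty signature differs from the golden one by exactly the signature of the error stream. That property is why a single comparison at the end of the run is enough to report pass or fail.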

As ICs move deeper into critical applications, from autonomous vehicles to implantable medical devices and AI accelerators, aligning DFT with reliability will no longer be a luxury or a competitive advantage; it will be a requirement for survival.

Those who design with this mindset will not only ship better silicon but also build trust, longevity, and leadership in industries where failure is not an option.